2011-ML

These are my notes from Andrew Ng's ML class, a course on Machine Learning.

- ML class overview for an overview

- ML class journal for my journal

Course undertaken October to December 2011.

Week 1

INTRODUCTION

Welcome

Machine learning is an exciting recent technology. In this class we learn about the state of the art and gain practice implementing and deploying ML algorithms.

Examples of ML algorithms in practice include:

- Search engines like Google and Bing ranking web-pages

- Facebook or Apple's photo-tagging application

- Spam filtering

The dream of ML is to create machines that are as intelligent as humans, but this goal is a long way off. Many researchers believe that the best way to reach that goal is to come up with learning algorithms that mimic how the human brain learns.

In this class you learn about state of the art machine learning algorithms. But knowing the algorithms and the math isn't much good without also knowing how to get the stuff to work with problems that you care about. So we also do exercises.

Why is machine learning prevalent today? Machine learning grew out of work in AI and is a new capability for computers. We know how to program computers to do well-defined things like finding the shortest path between A and B, but we didn't know how to do more sophisticated things like ranking web pages, identifying friends in pictures, or filtering spam. There was a realisation that the only way to do these things was to have the machine learn to do them by itself.

Machine learning is used widely in industry.

Examples of machine learning:

- Database mining

  - Large datasets from growth of automation/web.

    - clickstream data

    - medical records

    - biology

    - engineering

- Applications that can't be programmed by hand

  - autonomous helicopter

  - handwriting recognition

  - most of Natural Language Processing (NLP)

  - Computer Vision

- Self-customising programs

  - Amazon

  - Netflix product recommendations

- Understanding human learning (brain, real AI)

There are more recruiters contacting the lecturer than there are graduates of machine learning courses.

You will learn machine learning algorithms and terminology and start to get a sense of when each one may be appropriate.

What is Machine Learning?

Here we define what machine learning is and try to give a sense of when it might be appropriate. There isn't a well-accepted definition of what is and what is not machine learning. Here are some examples of attempts at a definition:

- Arthur Samuel (1959). Machine Learning: Field of study that gives computers the ability to learn without being explicitly programmed.

Samuel's claim to fame was that in the 1950s he created a checkers playing program. He had the program play tens of thousands of games against itself, and by watching what sort of board positions tended to lead to wins, and what sort of board positions tended to lead to losses, the program learned what good and bad positions were.

- Tom Mitchell (1998). Well-posed Learning Problem: A computer program is said to learn from experience E with respect to some task T and some performance measure P, if its performance on T, as measured by P, improves with experience E.

From the checkers example:

- E: experience of playing tens of thousands of games against itself

- T: task of playing checkers

- P: the probability that it wins the next game of checkers against a new opponent

There will be questions throughout the videos.

There are several different types of ML algorithms. The two main types are:

- Supervised learning

  - teach computer how to do something

- Unsupervised learning

  - computer learns by itself

Other types of algorithms are:

- Reinforcement learning

- Recommender systems

We will also learn practical advice for applying learning algorithms. Algorithms are like tools: it is not enough to have the tools, you also need to know how to use them.

Supervised Learning

This is probably the most common type of machine learning problem.

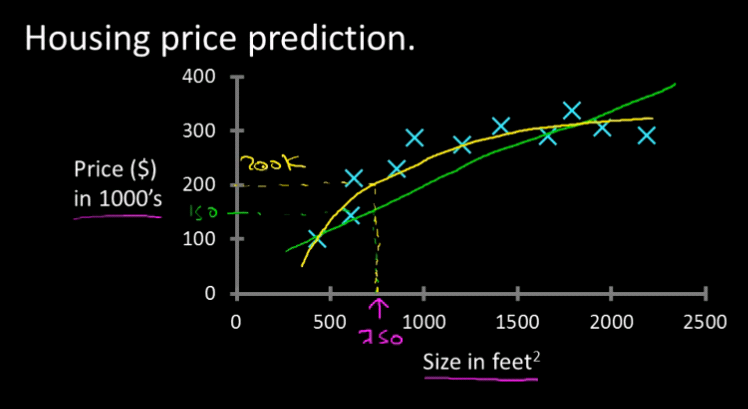

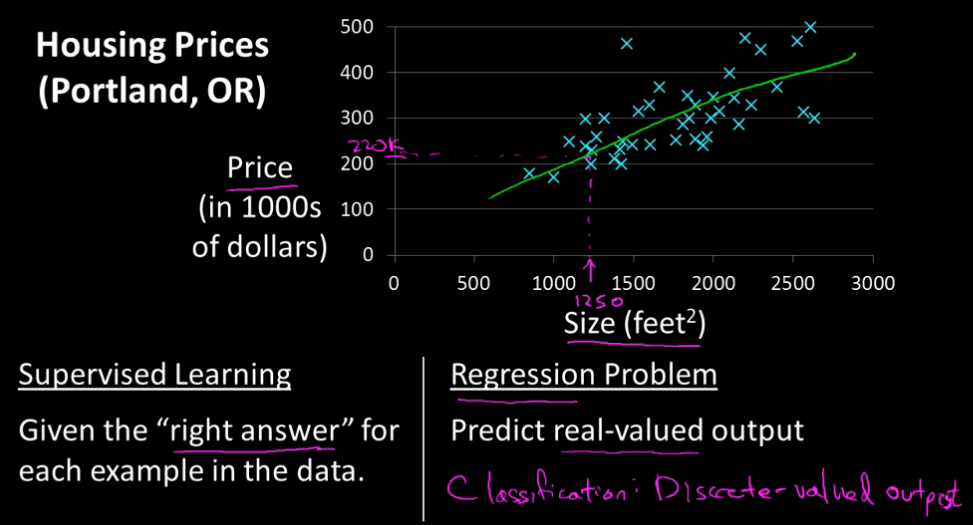

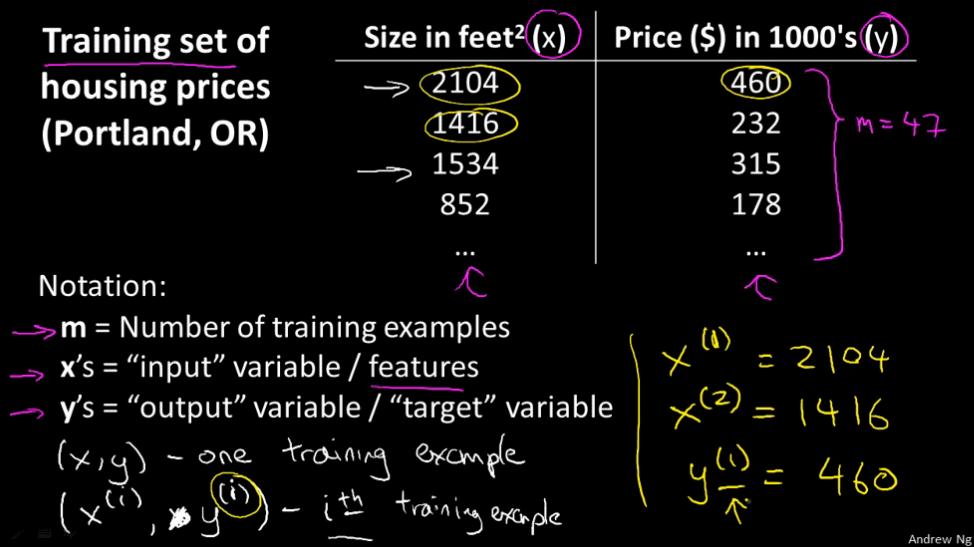

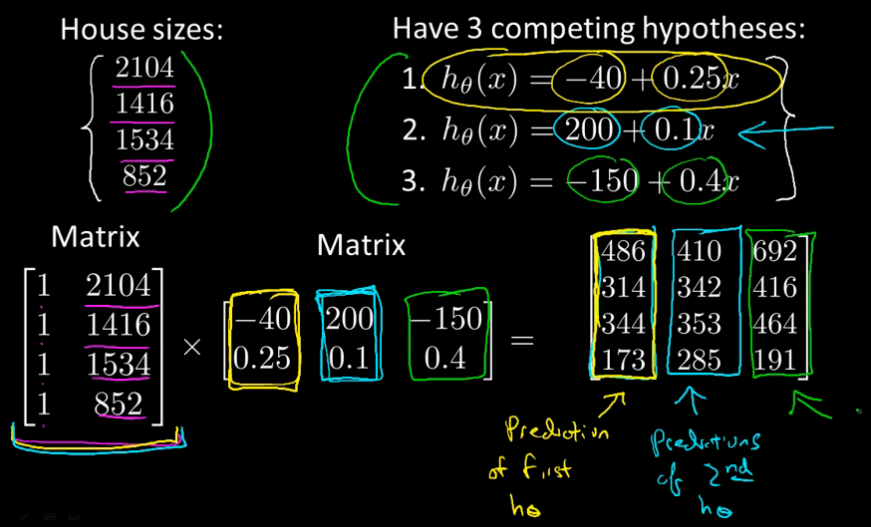

One example is predicting housing prices from a dataset that records the sale price of each house against its size in square feet. Maybe you could fit a straight line to the data, or maybe you could fit a quadratic function (a second order polynomial). One of the things we'll talk about later is how to choose whether to fit a straight line or a quadratic function to the data.

In Supervised Learning the algorithm is given a dataset in which the "right answers" are known, as in our example, where each house in the dataset had a known sale price and size in square feet.

This is known as a Regression problem.

Regression: predict continuous valued output (price).
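To make the straight line versus quadratic choice concrete, here is a minimal Python sketch (the course itself uses Octave). It is only an illustration under stated assumptions: the house sizes and prices are made up, and the helper names `fit_polynomial`, `predict` and `squared_error` are hypothetical. It uses ordinary least squares solved through the normal equations, which is one common way to fit a polynomial and not necessarily the method the course goes on to teach.

```python
def fit_polynomial(xs, ys, degree):
    """Least-squares fit; returns [c0, c1, ...] for c0 + c1*x + c2*x^2 + ..."""
    n = degree + 1
    # Normal equations A c = b, with A[i][j] = sum(x^(i+j)) and b[i] = sum(y * x^i).
    a = [[sum(x ** (i + j) for x in xs) for j in range(n)] for i in range(n)]
    b = [sum(y * x ** i for x, y in zip(xs, ys)) for i in range(n)]
    # Gaussian elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        b[col], b[pivot] = b[pivot], b[col]
        for row in range(col + 1, n):
            factor = a[row][col] / a[col][col]
            for k in range(col, n):
                a[row][k] -= factor * a[col][k]
            b[row] -= factor * b[col]
    # Back substitution.
    coeffs = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(a[i][j] * coeffs[j] for j in range(i + 1, n))
        coeffs[i] = (b[i] - tail) / a[i][i]
    return coeffs


def predict(coeffs, x):
    """Evaluate the fitted polynomial at x."""
    return sum(c * x ** i for i, c in enumerate(coeffs))


def squared_error(coeffs, xs, ys):
    """Sum of squared differences between predictions and known prices."""
    return sum((predict(coeffs, x) - y) ** 2 for x, y in zip(xs, ys))


# Made-up training data: size in thousands of square feet, price in thousands.
sizes = [1.0, 1.5, 2.0, 2.5, 3.0]
prices = [200.0, 270.0, 330.0, 370.0, 400.0]

line_fit = fit_polynomial(sizes, prices, degree=1)
quadratic_fit = fit_polynomial(sizes, prices, degree=2)

print("line error:     ", squared_error(line_fit, sizes, prices))
print("quadratic error:", squared_error(quadratic_fit, sizes, prices))
print("predicted price for 2.2:", predict(quadratic_fit, 2.2))
```

On the training data the quadratic can never fit worse than the line, because a line is a quadratic with a zero second-order coefficient. That alone does not make it the better model for new houses, which is why choosing between them needs separate treatment.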

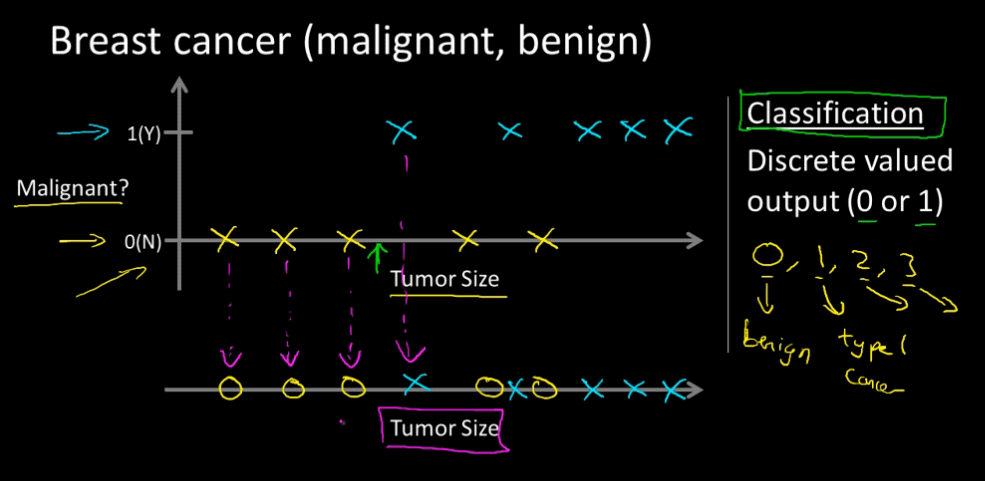

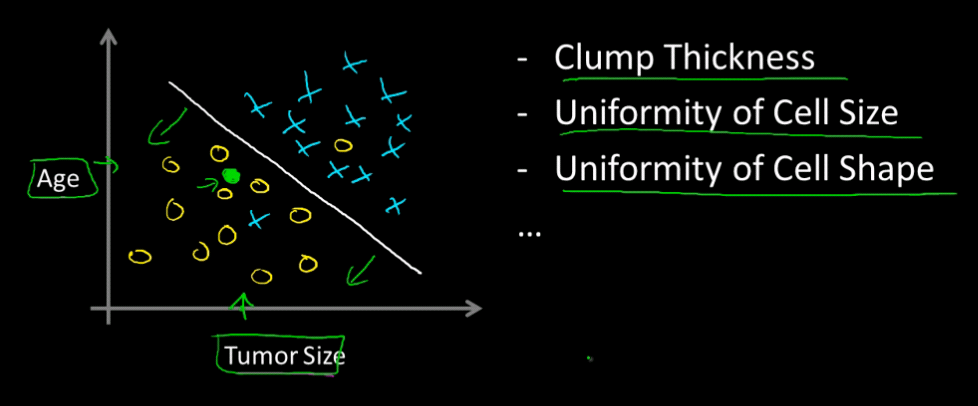

Another example is breast cancer prediction (malignant, benign).

This type of problem has a discrete valued output (e.g. 0 or 1) and is known as a Classification problem. You can have more than 2 discrete values in a classification problem.

Classification: discrete valued output (e.g. 0 or 1)

In this problem we use Tumour Size to determine malignancy, but we might use more than one feature (attribute). For example, we might know Age and Tumour Size.
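As a small illustration of a discrete valued output, the following Python sketch learns a single cut-off on tumour size from labelled examples. It is an assumption-laden toy and not the classification algorithm taught in the course: the sizes and labels are invented, the names `learn_threshold` and `classify` are hypothetical, and only one feature is used.

```python
def learn_threshold(sizes, labels):
    """Pick the size cut-off that misclassifies the fewest training examples."""
    distinct = sorted(set(sizes))
    # Candidate cut-offs are the midpoints between neighbouring sizes.
    candidates = [(a + b) / 2 for a, b in zip(distinct, distinct[1:])]

    def training_errors(threshold):
        return sum((size > threshold) != bool(label)
                   for size, label in zip(sizes, labels))

    return min(candidates, key=training_errors)


def classify(size, threshold):
    """Return 1 (malignant) if the size exceeds the cut-off, else 0 (benign)."""
    return 1 if size > threshold else 0


# Invented training data: tumour size and the known "right answer".
tumour_sizes = [1.0, 1.5, 2.0, 2.5, 3.5, 4.0, 4.5, 5.0]
malignant = [0, 0, 0, 0, 1, 1, 1, 1]

threshold = learn_threshold(tumour_sizes, malignant)
print("learned cut-off:", threshold)
print("size 2.0 ->", classify(2.0, threshold))
print("size 4.2 ->", classify(4.2, threshold))
```

With a second feature such as Age, a single cut-off is no longer enough and the decision boundary becomes a line or curve in the Age and Tumour Size plane.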

Unsupervised Learning

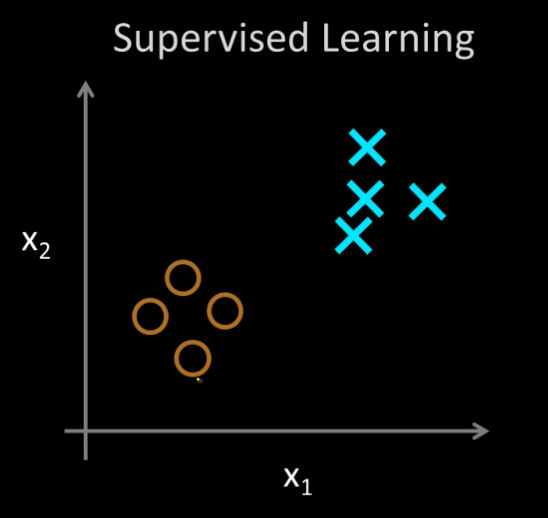

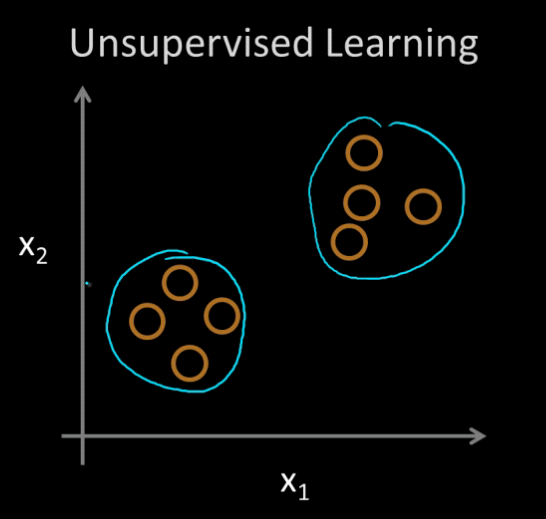

The difference here is that, whereas in supervised learning the categories are known (circle or cross), in unsupervised learning the categories are unknown.

An unsupervised learning algorithm might be able to break the data into clusters.

This is called a Clustering Algorithm and it turns out to be used in many places.
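One widely used clustering algorithm is k-means, which is not named at this point in the notes. The Python sketch below is a minimal version under stated assumptions: the 2-D points are made up, the number of clusters `k` is chosen by hand, and the initial centroids are simply the first `k` points, which is a naive choice that can give poor results on other data. The function names are hypothetical.

```python
def squared_distance(p, q):
    """Squared Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def k_means(points, k, iterations=10):
    """Alternate between assigning points to centroids and moving centroids."""
    centroids = list(points[:k])  # naive initialisation: the first k points
    clusters = [[] for _ in range(k)]
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k),
                          key=lambda i: squared_distance(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its members.
        for i, members in enumerate(clusters):
            if members:
                centroids[i] = tuple(sum(axis) / len(members)
                                     for axis in zip(*members))
    return centroids, clusters


# Made-up unlabelled data: no circle/cross categories are given.
points = [(1.0, 1.0), (5.0, 5.2), (1.2, 0.8),
          (5.1, 4.9), (0.8, 1.1), (4.8, 5.0)]

centroids, clusters = k_means(points, k=2)
for centroid, members in zip(centroids, clusters):
    print(centroid, members)
```

No labels are passed in anywhere: the grouping comes only from the distances between the points.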

One example of where clustering is used is Google News. Every day Google News looks at tens or hundreds of thousands of news stories and groups them into cohesive news stories. You can click on different links to different related stories. News stories on the same topic are grouped together.

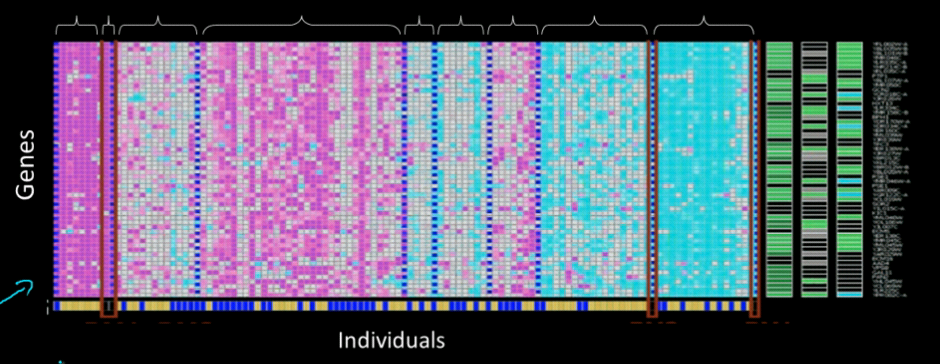

Here is a graph that shows to what degree genes are expressed:

Here the algorithm automatically finds structure in the data by placing people into categories.

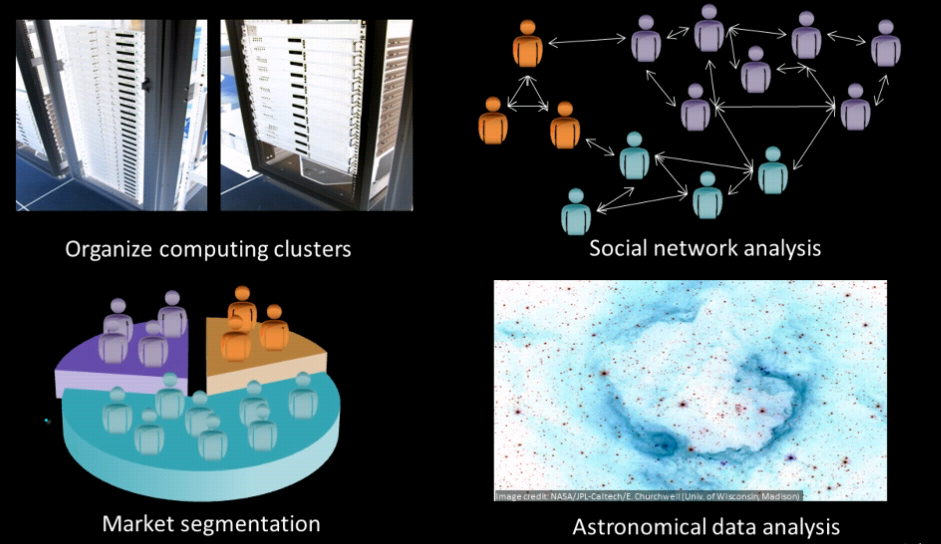

Unsupervised learning, or clustering, is used in a bunch of other applications:

Clustering is just one example of an unsupervised learning problem. Another example is found in the "Cocktail party problem" where two people are heard talking at the same time and the algorithm can separate the two audio sources given recordings from two microphones. The audio is separated using the following line of code:

[W,s,v] = svd((repmat(sum(x.*x,1),size(x,1),1).*x)*x');

We're going to be using the Octave programming environment in this course (or MATLAB if you have it). Octave is free open source software. Using tools like Octave or MATLAB, many learning algorithms become just a few lines of code.

It's typical to prototype software in Octave because it's quick and easy to do so. You can implement in C++, Java or Python, but it's much more complicated. If you use Octave as your learning and prototyping tool you will learn the algorithms more quickly.

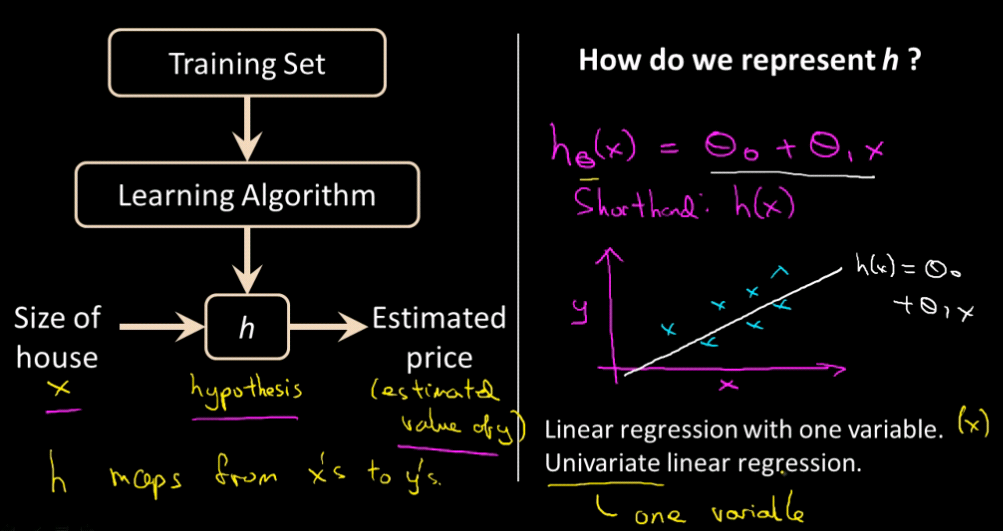

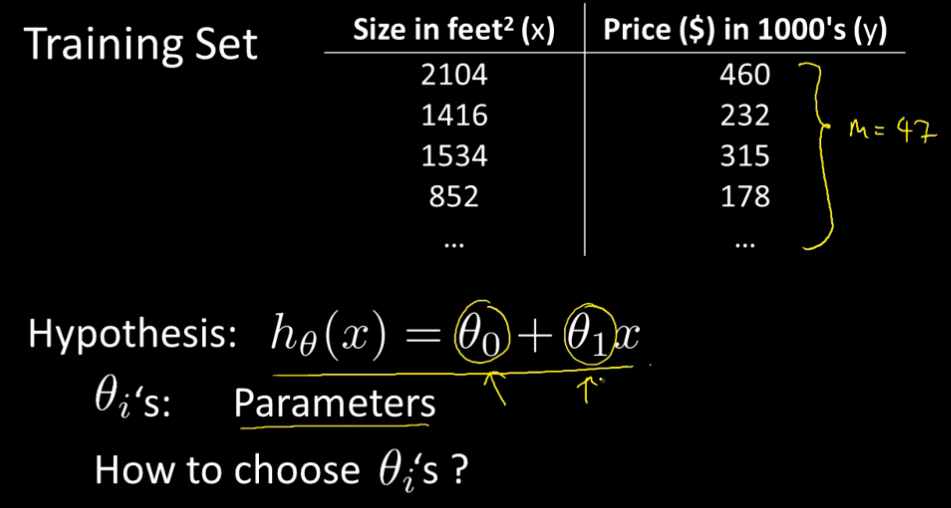

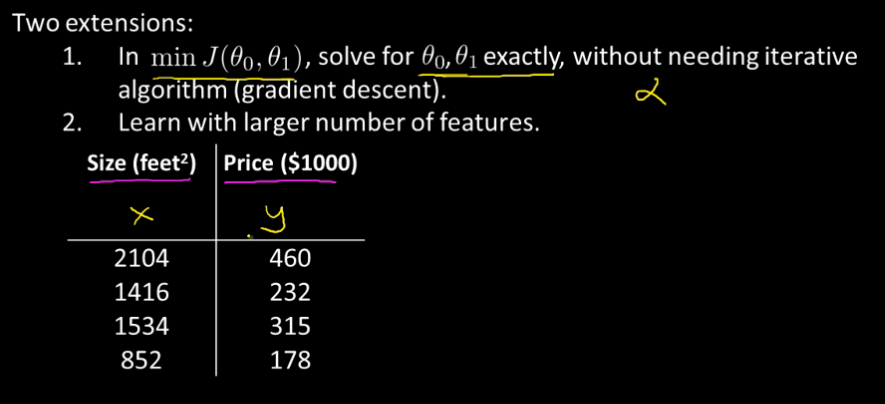

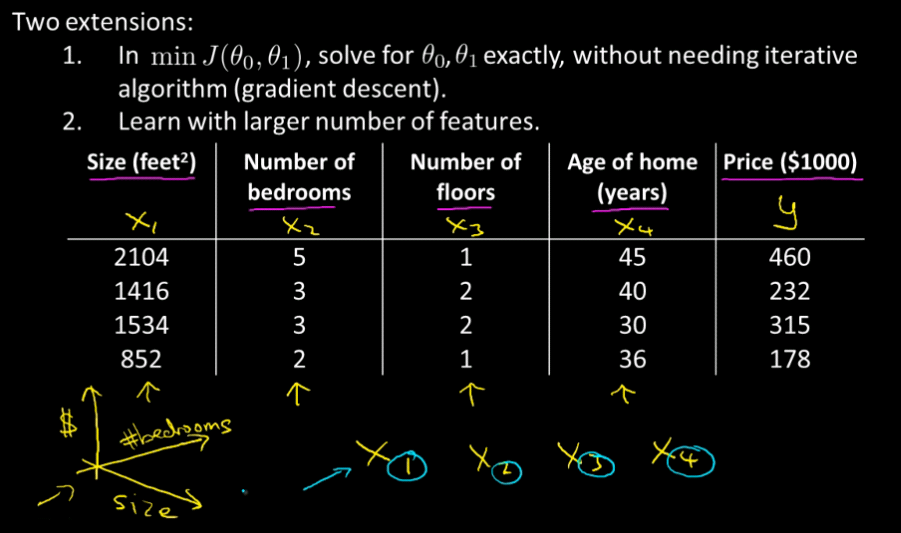

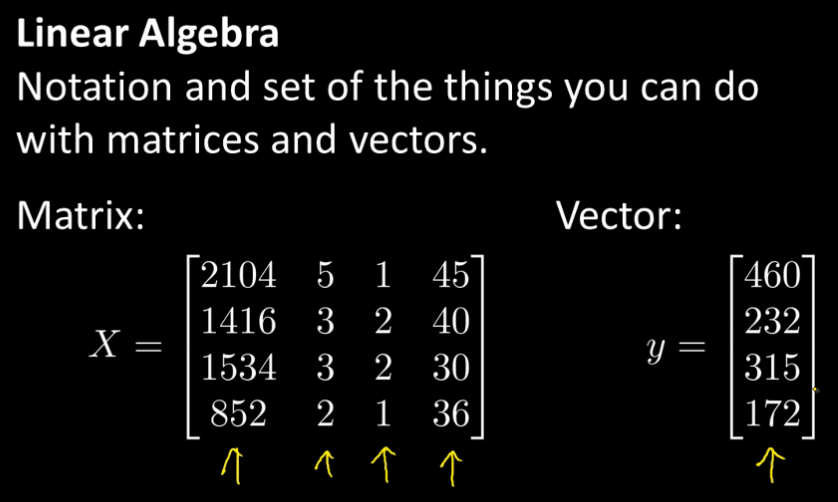

LINEAR REGRESSION WITH ONE VARIABLE

Model Representation

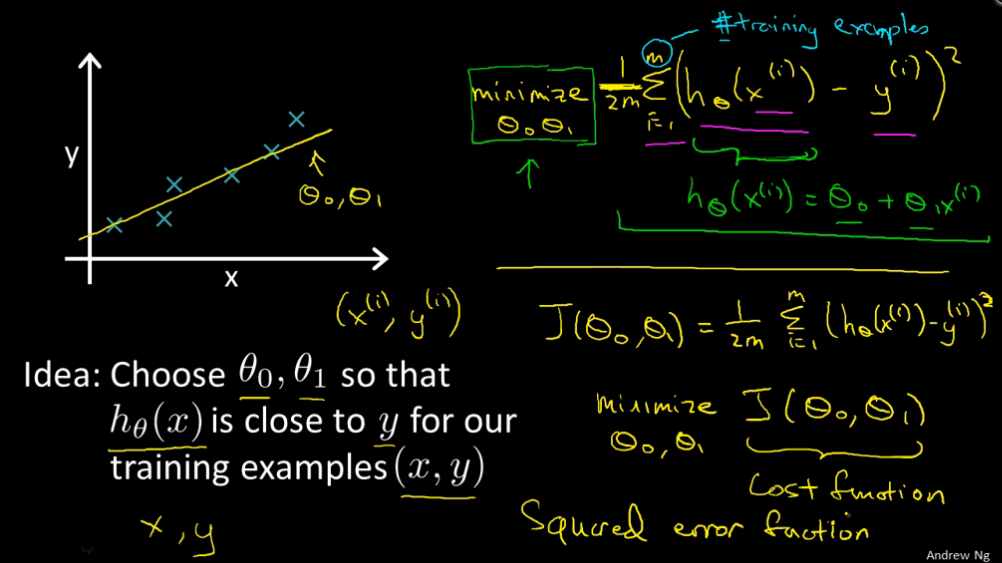

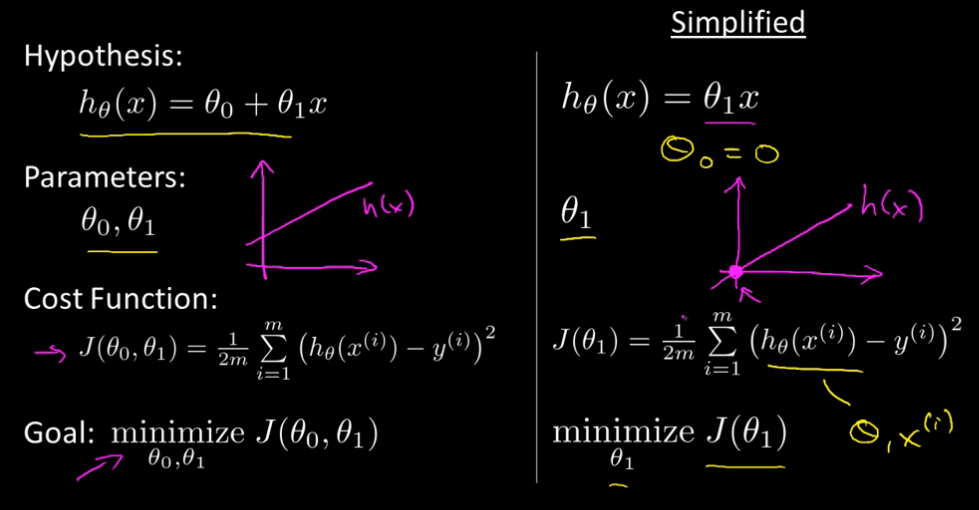

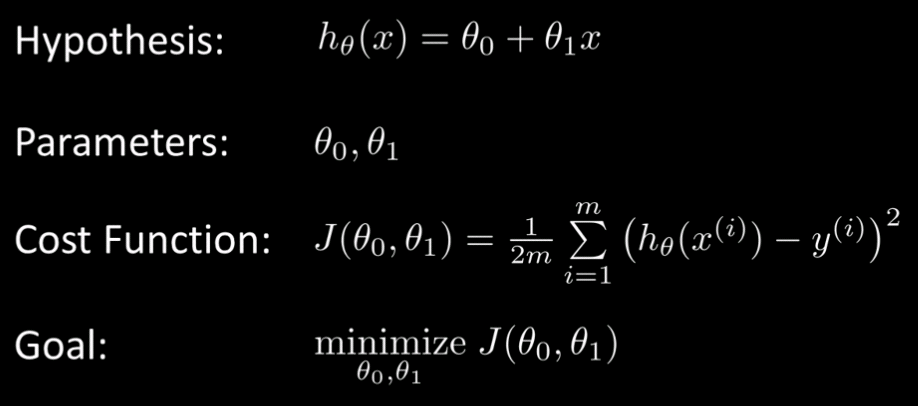

Cost Function

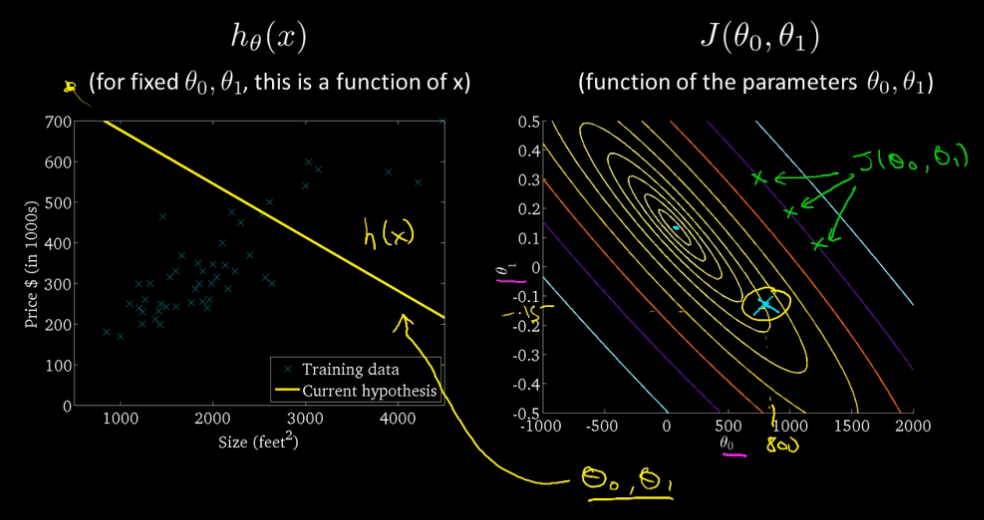

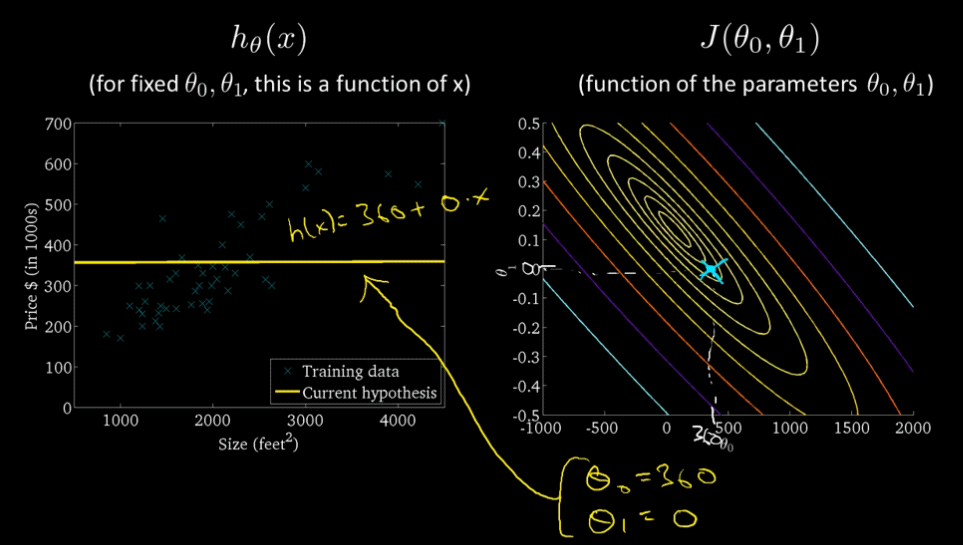

Here we define something called the Cost Function -- this will help us figure out how to fit the best possible straight line to our data.
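The formula itself appears only in the images, so the Python sketch below assumes the usual squared-error cost for a straight-line hypothesis h(x) = Θ0 + Θ1·x, namely J(Θ0, Θ1) = 1/(2m) · Σ (h(x) − y)², where m is the number of training examples. The training points and the function names `hypothesis` and `cost` are illustrative only.

```python
def hypothesis(theta0, theta1, x):
    """Straight-line prediction h(x) = theta0 + theta1 * x."""
    return theta0 + theta1 * x


def cost(theta0, theta1, xs, ys):
    """Assumed squared-error cost: half the mean squared prediction error."""
    m = len(xs)
    total = sum((hypothesis(theta0, theta1, x) - y) ** 2
                for x, y in zip(xs, ys))
    return total / (2 * m)


# Made-up training set that lies exactly on the line y = x.
xs = [1.0, 2.0, 3.0]
ys = [1.0, 2.0, 3.0]

print(cost(0.0, 1.0, xs, ys))  # perfect fit, cost is 0.0
print(cost(0.0, 0.5, xs, ys))  # line too shallow, cost is about 0.58
print(cost(0.0, 0.0, xs, ys))  # flat line, cost is about 2.33
```

The better the line fits the data, the smaller the cost, so fitting the best possible straight line becomes a matter of finding the parameters that minimise J.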

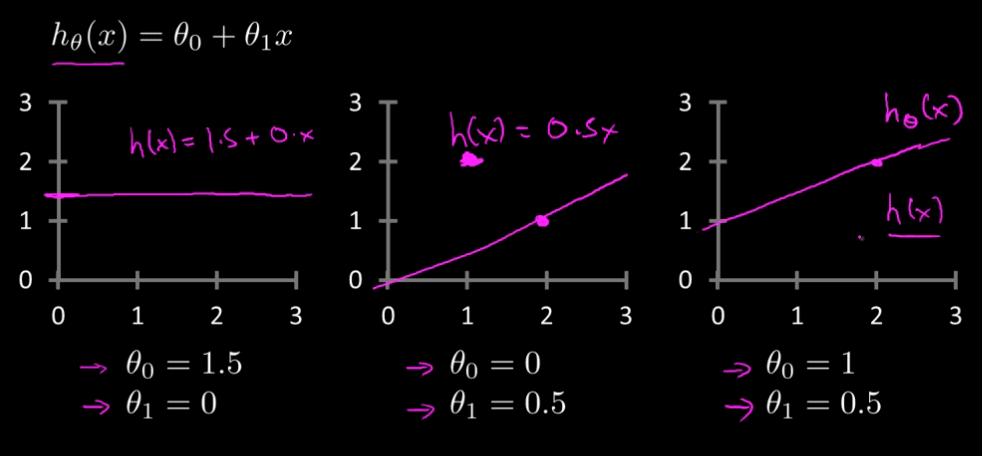

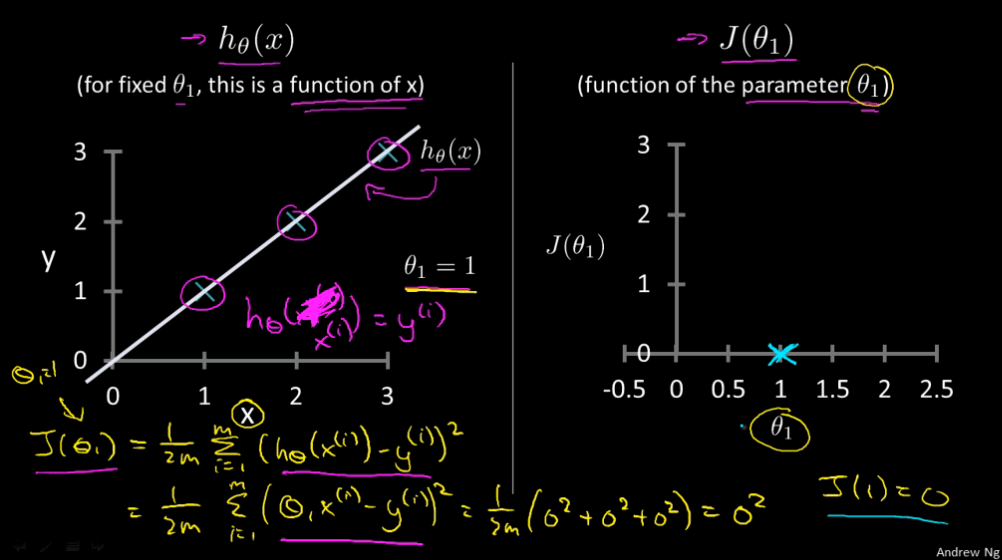

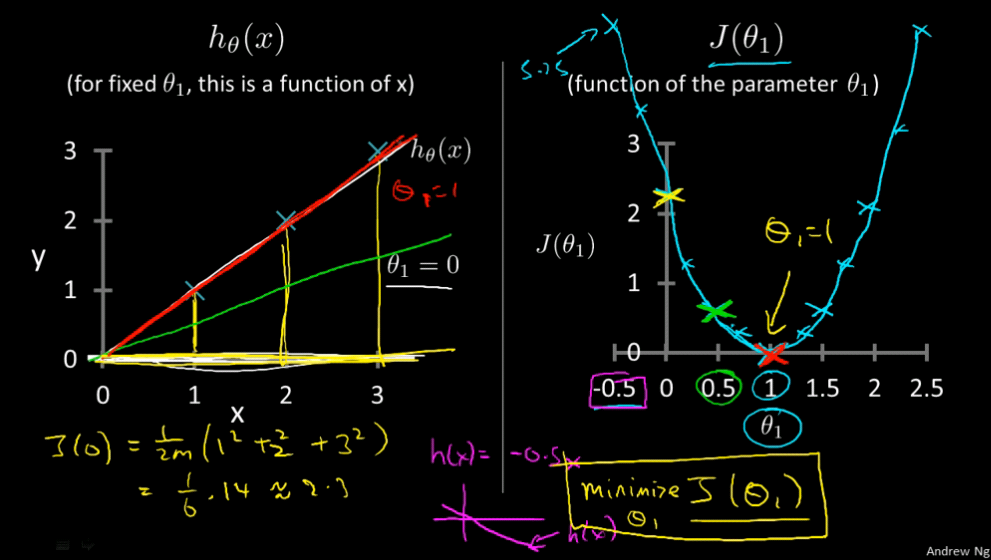

Cost Function - Intuition I

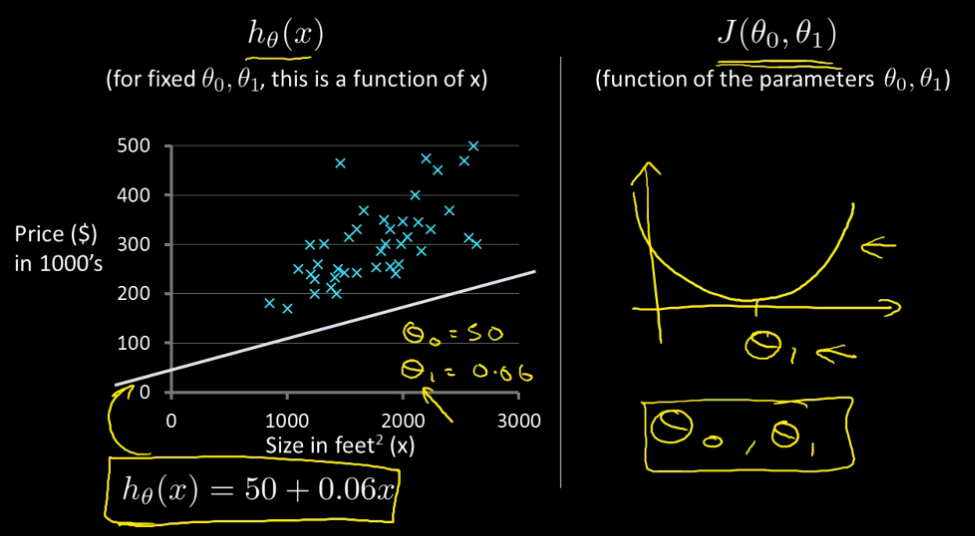

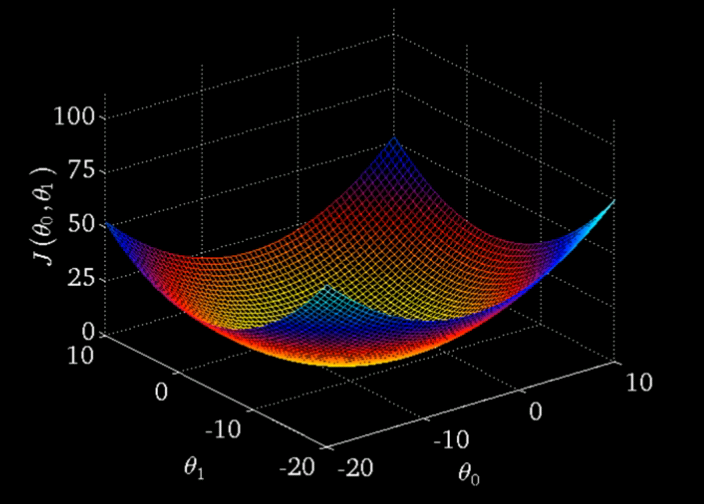

Cost Function - Intuition II

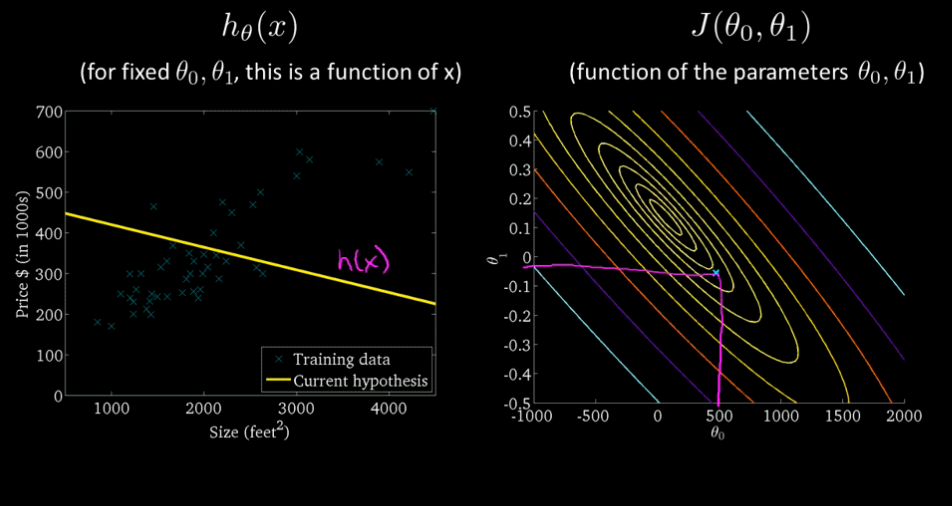

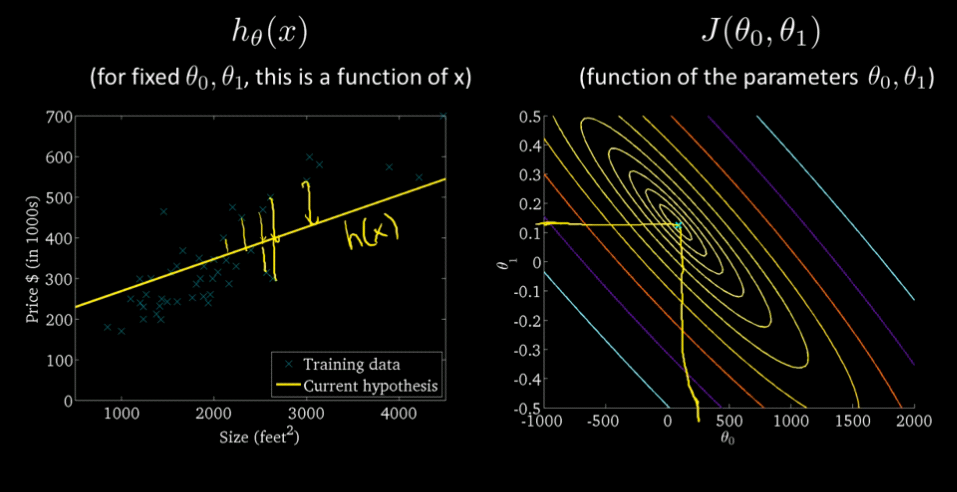

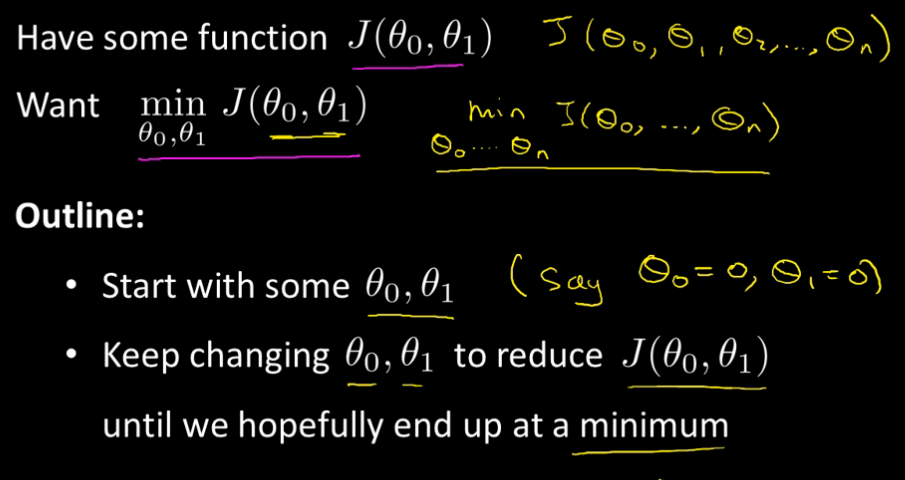

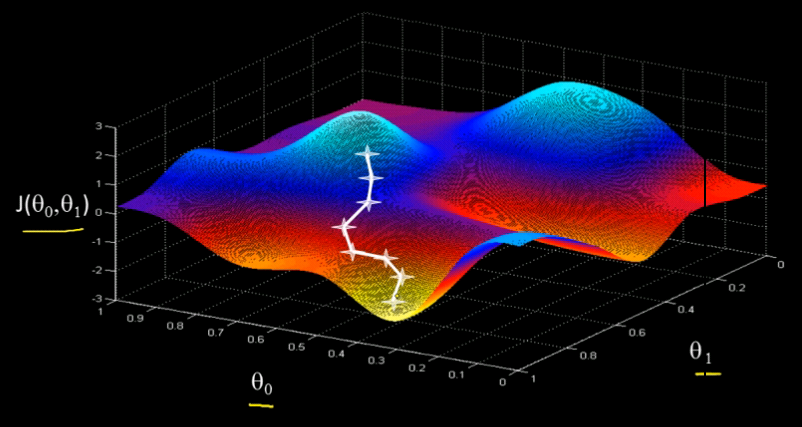

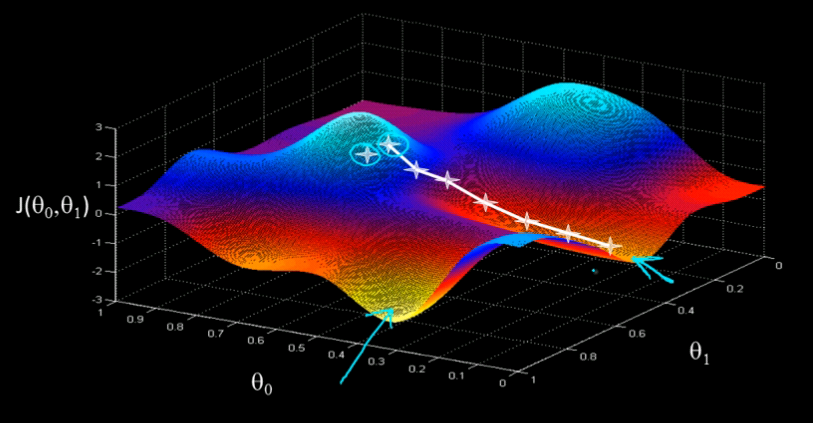

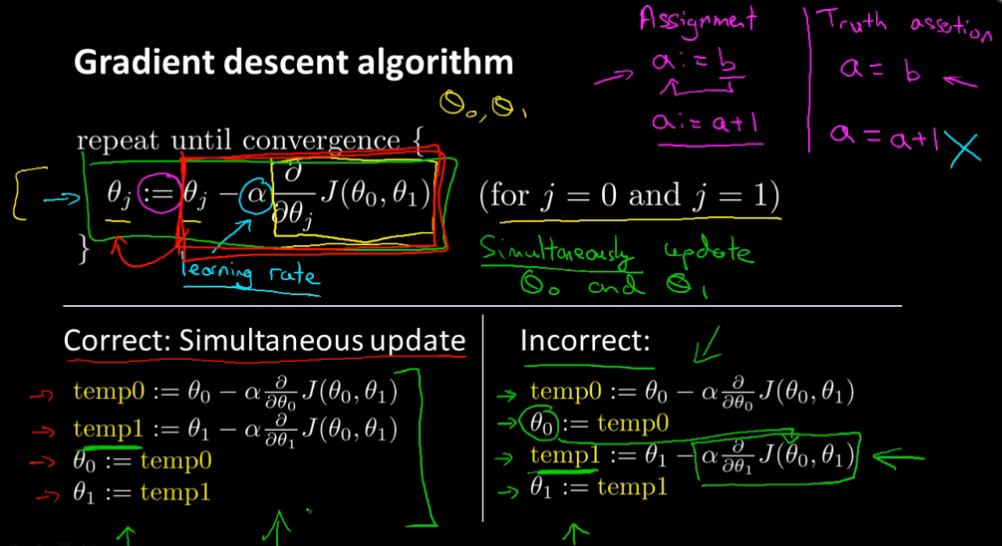

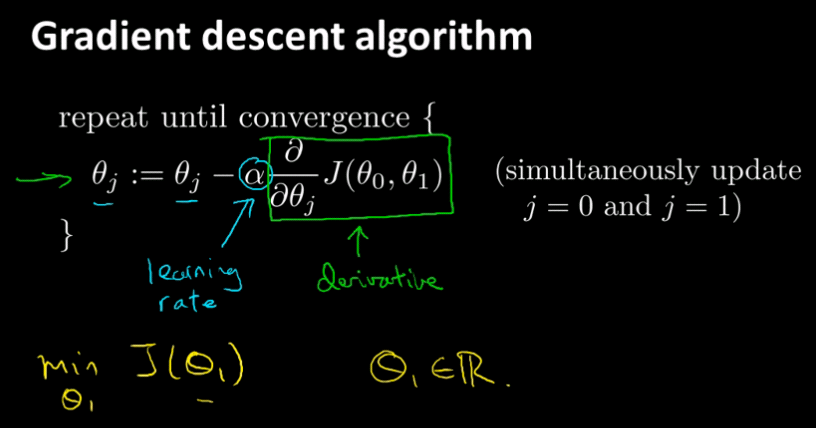

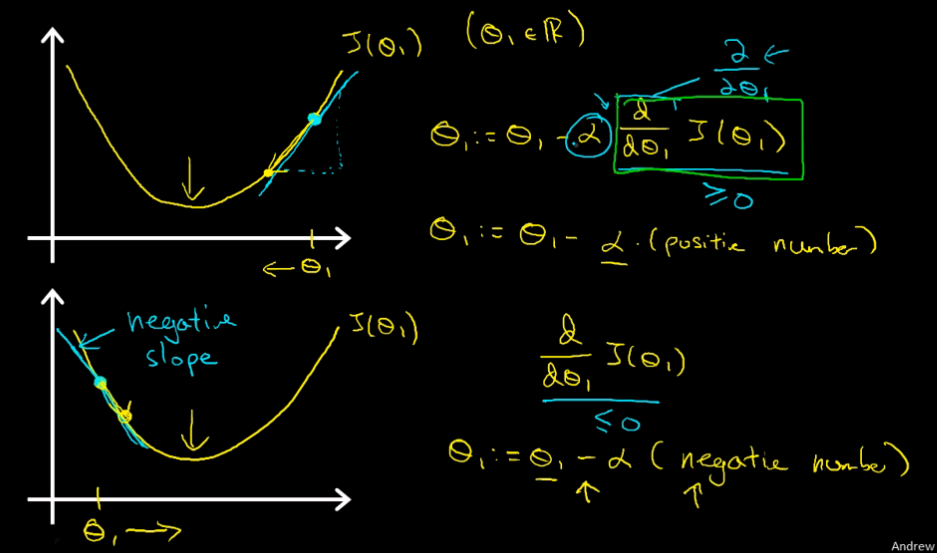

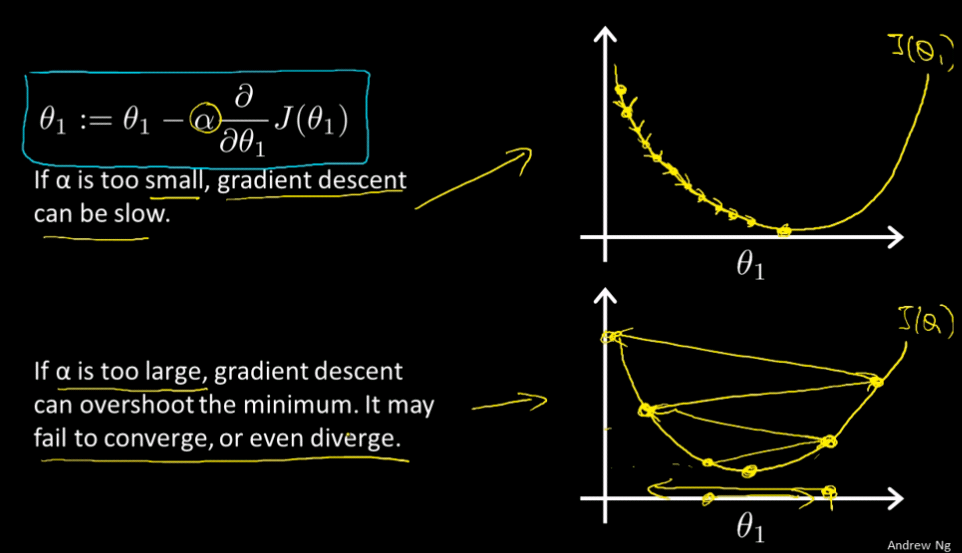

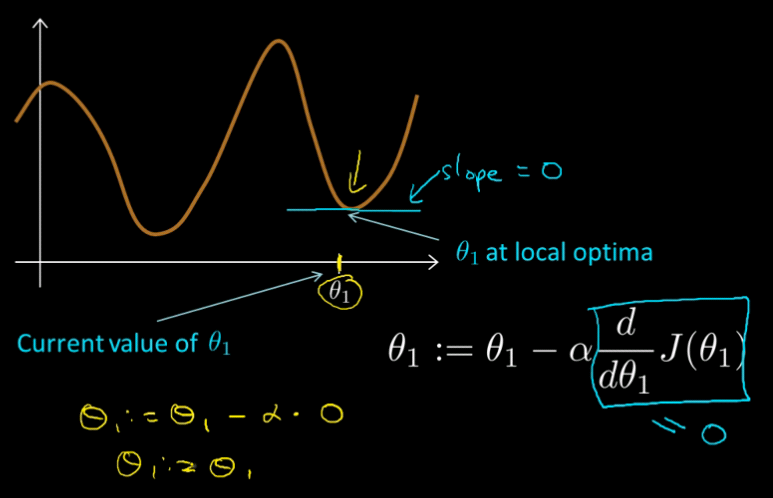

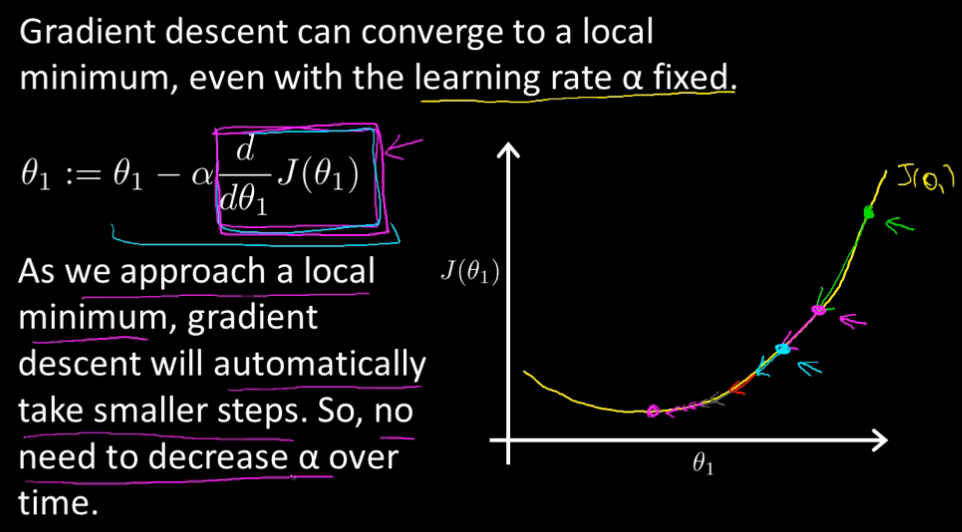

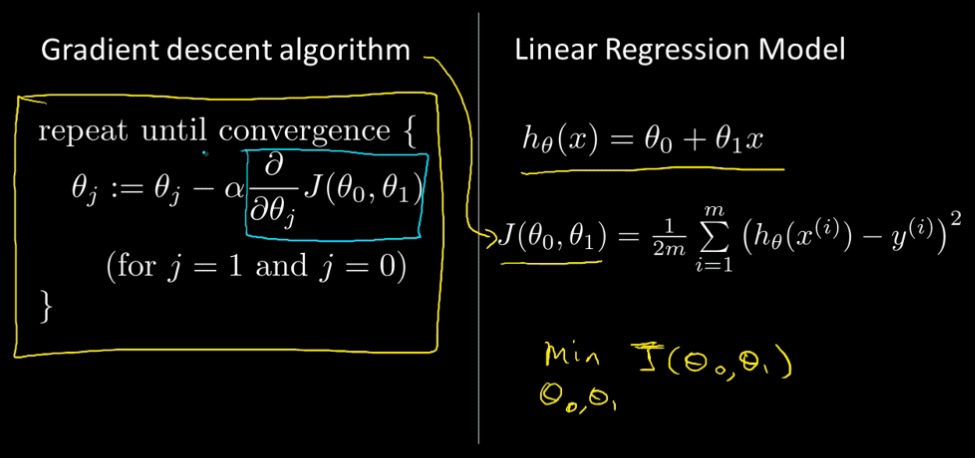

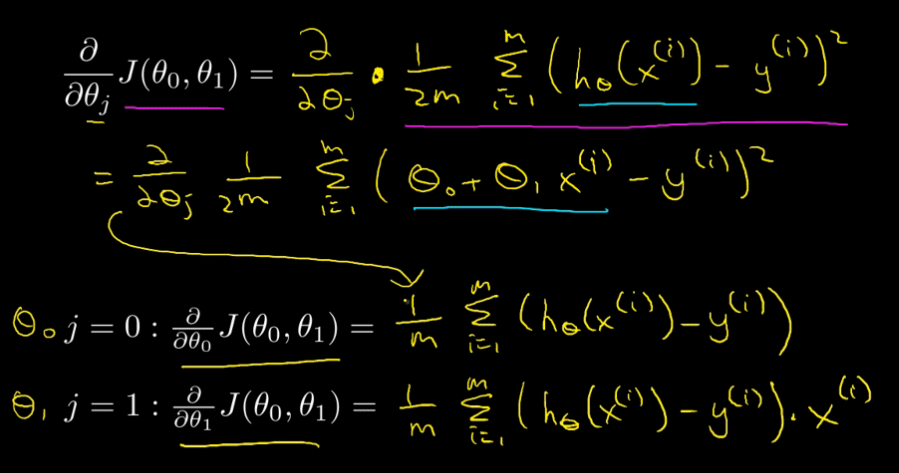

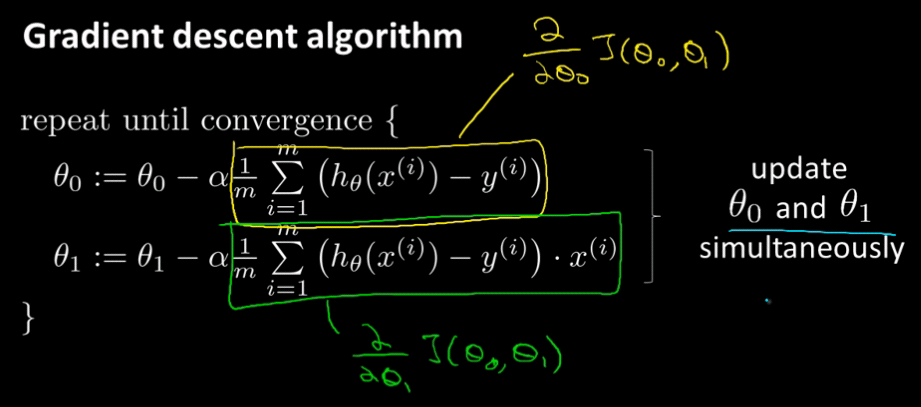

Gradient Descent

Gradient Descent is a general algorithm for minimising the Cost Function J. Gradient Descent is used all over the place in Machine Learning, not just in Linear Regression. Later in the course we will use Gradient Descent to minimise other functions as well, not just the Cost Function J for Linear Regression.

Gradient Descent algorithms can minimise functions with more than two parameters, e.g. J(Θ0,...,Θn), but here we will focus on two parameters for brevity.
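A minimal Python sketch of Gradient Descent on two parameters follows. It assumes the squared-error cost J(Θ0, Θ1) = 1/(2m) · Σ (Θ0 + Θ1·x − y)² for a straight-line hypothesis. The learning rate `alpha`, the iteration count, the zero starting point and the training data are all illustrative choices, not values from the course.

```python
def gradient_descent(xs, ys, alpha=0.1, iterations=1000):
    """Repeatedly step both parameters downhill on the squared-error cost."""
    theta0, theta1 = 0.0, 0.0  # arbitrary starting point
    m = len(xs)
    for _ in range(iterations):
        errors = [theta0 + theta1 * x - y for x, y in zip(xs, ys)]
        # Partial derivatives of J with respect to theta0 and theta1.
        grad0 = sum(errors) / m
        grad1 = sum(e * x for e, x in zip(errors, xs)) / m
        # Simultaneous update: both gradients use the old parameter values.
        theta0, theta1 = theta0 - alpha * grad0, theta1 - alpha * grad1
    return theta0, theta1


# Made-up training set lying exactly on y = 1 + 2x.
xs = [1.0, 2.0, 3.0]
ys = [3.0, 5.0, 7.0]

theta0, theta1 = gradient_descent(xs, ys)
print("theta0 =", theta0, "theta1 =", theta1)
```

If `alpha` is too large the updates can overshoot and diverge, and if it is too small convergence is slow, so the value used here only suits this small example.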