AI class unit 8

These are my notes for unit 8 of the AI class.

Planning

Introduction

Hi, and welcome back. This unit is about planning. We defined AI to be the study and process of finding appropriate actions for an agent. So in some sense planning is really the core of all of AI. The technique we looked at so far was problem solving search over a state space using techniques like A*.

Given a state space and a problem description, we can find a solution, a path to the goal. Those approaches are great for a variety of environments, but they only work when the environment is deterministic and fully observable.

In this unit, we will see how to relax those constraints.

Problem Solving vs Planning

You remember how problem solving worked. We have a state space like this, and we're given a start state and a goal to reach, and then we'd search for a path to find that goal, and maybe we find this path. Now the way a problem solving agent would work, is first it does all the work to figure out the path to the goal just doing by thinking, and then it starts to execute that path, to drive or walk, however you want to get there, from the start state to the end state.

But think about what would happen if you did that in real life. If you did all your planning ahead of time, you had the complete goal, and then without interacting with the world, without sensing it at all, you started to execute that path. Well, this has in fact been studied. People have gone out and blindfolded walkers, put them in a field and told them to walk in a straight line, and the results are not pretty. Here are the GPS tracks to prove it.

So we take a hiker, we put him at a start location, say here, and we blindfold him so that he can't see anything in the horizon, but just has enough to see his or her feet so that they won't stumble over something, and tell them execute the plan of going forward. Put one foot in front of each other and walk forward in a straight line, and these are the typical paths we see. Start out going straight for a while, but then go in loop de loops and end up not at a straight path at all. These ones over here, starting in this location, are even more convoluted. They get going straight for a little bit and then go in very tight loops.

So people are incapable of walking a straight line without any feedback from the environment. Now here on this yellow path, this one did much better, and why was that? Well it's because these paths were on overcast days, and so there was no input to make sense of. Whereas on this path was on a very sunny day, and so even though the hiker couldn't see farther than a few feet in front of him, he could see shadows and say, "As long as I keep the shadows pointing in the right direction then I can go in a relatively straight line."

So the moral is we need some feedback from the environment. We can't just plan ahead and come up with a whole plan. We've got to interleave planning and executing.

Planning vs Execution

Now why do we have to interleave planning and execution? Mostly because of properties of the environment that make it difficult to deal with. The most important one is if the environment is stochastic. That is, if we don't know for sure what an action is going to do.

If we know what everything is going to do, we can plan it out right from the start, but if we don't, we have to be able to deal with contingencies of, say I tried to move forward and the wheels slipped, and I went some place else, or the brakes might skid, or if we're walking our feet don't go 100% straight, or consider the problem of traffic lights. If the traffic light is red, then the result of the action of go forward through the intersection is bound to be different than if the traffic light is green.

Another difficulty we have to deal with is multi-agent environments. If there are other cars and people that can get in our way, we have to plan about what they're going to do, and we have to react when they do something unexpected, and we can only know that at execution time, not at planning time.

The other big problem is with partial observability. Suppose we've come up with a plan to go from A to S to F to B. That plan looks like it will work, but we know that at S, the road to F is sometimes closed, and there will be a sign there telling us whether it's closed or not, but when we start off we can't read that sign. So that's partial observability.

Another way to look at it is when we start off we don't know what state we're in. We know we're in A, but we don't know if we're in A in the state where the road is closed or if we're in A in the state where the road is open, and it's not until we get to S that we discover what state we're actually in, and then we know if we can continue along that route or if we have to take a detour south.

Now in addition to these properties of the environment, we can also have difficulty because of lack of knowledge on our own part. So if some model of the world is unknown, that is, for example, we have a map or GPS software that's inaccurate or incomplete, then we won't be able to execute a straight-line plan.

And similarly, often we want to deal with a case where the plans have to be hierarchical. And certainly a plan like this is at a very high level. We can't really execute the action of going from A to S when we're in a car. All the actions that we can actually execute are things like turn the steering wheel a little bit to the right, press on the pedal a little bit more. So those are the low-level steps of the plan, but those aren't sketched out in detail when we start, when we only have the high-level parts of the plan, and then it's during execution that we schedule the rest of the low-level parts of the plan.

Now most of these difficulties can be addressed by changing our point of view. Instead of planning in the space of world states, we plan in the space of belief states. To understand that let's look at a state.

Vacuum Cleaner Example

Here's a state space diagram for a simple problem. It involves a room with two locations. The left we call A, and the right we call B, and in that environment there's a vacuum cleaner, and there may or may not be dirt in either of the two locations, so that gives us eight total states. Dirt is here or not, here or not, and the vacuum cleaner is here or here. So that's two times two times two is eight possible states, and I've drawn here the states based diagram with all the transitions for the three possible actions, and the actions are moving right, so we'd go from this state to this state, moving left, we'd go from this state to this state, and sucking up dirt, we'd go from this state to this state, for example; and in this state space diagram, if we have a fully deterministic, fully observable world, it's easy to plan. Say we start in this state, and we want to be -- end up in a goal state where both sides are clean. We can execute the suck-dirt action and get here and then move right, and then suck dirt again, and now we end up in a goal state where everything is clean.

Now suppose our robot vacuum cleaner's sensors break down, and so the robot can no longer perceive either which location it's in or whether there's any dirt. So we now have an unobservable or sensor-less world rather than a fully observable one, and how does the agent then represent the state of the world? Well it could be in any one of these eight states, and so all we can do to represent the current state is draw a big circle or box around everything, and say, "I know I'm somewhere inside here."

Now that doesn't seem like it helps very much. What good is it to know that we don't really know anything at all? But the point is that we can search in the state space of belief states rather than in the state space of actual spaces. So we believe that we're in one of these eight states, and now when we execute an action, we're going to get to another belief state. Let's take a look at how that works.

Sensorless Vacuum Cleaner Problem

This is the belief state space for the sensor-less vacuum problem. So we started off here. We drew the circle around this belief state. So we don't know anything about where we are, but the amazing thing is, if we execute actions we can gain knowledge about the world even without sensing.

So let's say we move right, then we'll know we're in the right-hand location. Either we were in the left, and we moved right, and arrived there, or we were in the right to begin with, and we bumped against the wall and stayed there. So now we end up in this state. We now know more about the world. We're down to four possibilities rather than eight, even though we haven't observed anything.

And now note something interesting, that in the real world, the operations of going left and going right are inverses of each other, but in the belief state world going right and going left are not inverses. If we go right, and then we go left, we don't end up back where we were in a state of total uncertainty, rather going left takes us over here where we still know we're in one of four states rather than in one of eight states.

Note that it's possible to form a plan that reaches a goal without ever observing the world. Plans like that are called conform-it plans. For example, if the goal is to be in a clean location all we have to do is suck. So we go from one of these eight states to one of these four states and, in every one of those four, we're in a clean location. We don't know which of the four we're in, but we know we've achieved the goal.

It's also possible to arrive at a completely known state. For example, if we start here, we go left; we suck up the dirt there. We go right and suck up the dirt, now we're down to a belief state consisting of one single state that is we know exactly where we are.

Here's a question for you: how do I get from the state where I know my current square is clean, but know nothing else, to the belief state where I know that I'm in the right-hand side location and that that location is clean? What I want you to do is click on the sequence of actions, left, right or suck that will take u from that start to that goal.

And the answer is that the state of knowing that your current square is clean corresponds to this state. This belief state with four possible world states, and if I then execute the right action, followed by the suck action, then I end up in this belief state, and that satisfies the goal. I know I'm in the right-hand-side location and I know that location is clean.

Partially Observable Vacuum Cleaner Example

We've been considering sensor-less planning in a deterministic world. Now I want to turn our attention to partially observable planning but still in a deterministic world.

Suppose we have what's called local sensing, that is our vacuum can see what location it is in and it can see what's going on in the current location, that is whether there's dirt in the current location or not, but it can't see anything about whether there's dirt in any other location.

So here's a partial diagram of the -- part of the belief state from that world, and I want it to show how the belief state unfolds as two things happen. First, as we take action, so we start in this state, and we take the action of going right, and in this case we still go from two states in our belief state to two new ones, but then, after we do an action, we do an observation, and we have the act-percept cycle, and now, once we get the observation, we can split that world, we can split our belief state to say, if we observe that we're in location B and it's dirty, then we know we're in this belief state here, which happens to have exactly one world state in it, and if we observe that we're clean then we know that we're in this state, which also has exactly one in it.

Now what does the act-observe cycle do to the sizes of the belief states? Well in a deterministic world, each of the individual world states within a belief state maps into exactly one other one. That's what we mean by deterministic, and so that means the size of the belief state will either stay the same or it might decrease if two of the actions sort of accidentally end up bringing you to the same place.

On the other hand, the observation works in kind of the opposite way. When we observe the world, what we're doing is we're taking the current belief state and partitioning it up into pieces. Observations alone can't introduce a new state -- a new world state -- into the belief state. All they can do is say, "some of them go here, and some of them go here." Now it may be that for some observation all the belief states go into one bin, and so we make an observation and we don't learn anything new, but at least the observation can't make us more confused than we were before the observation.

Stochastic Environment Problem

Now let's move on to stochastic environments. Let's consider a robot that has slippery wheels so that sometimes when you make a movement -- a left or a right action -- the wheels slip and you stay in the same location. And sometimes they work and you arrive where you expected to go. And let's assume that the suck action always works perfectly.

Now we get a belief state space that looks something like this. And notice that the results of actions will often result in a belief state that's larger than it was before -- that is, the action will increase uncertainty because we don't know what the result of the action is going to be. And so here for each of the individual world states belonging to a belief state, we have multiple outcomes for the action, and that's what stochastic means. And so we end up with a larger belief state here.

But in terms of the observation, the same thing holds as in the deterministic world. The observation partitions the belief state into smaller belief states. So in a stochastic partially observable environment, the actions tend to increase uncertainty, and the observations tend to bring that uncertainty back down.

Now, how would we do planning in this type of environment? Well I haven't told you yet, so you won't know the answer for sure, but I want you to try to figure it out anyway, even if you might get the answer wrong. So imagine I had the whole belief state from which I've diagrammed just a little bit here and I wanted to know how to get from this belief state to one in which all squares are clean.

So I'm going to give you some possible plans, and I want you to tell me whether you think each of these plans will always work, or maybe sometimes work depending on how the stochasticity works out. And so here are the possible plans.

Remember I'm starting here, and I want to know how to get to a belief state in which all the squares are clean. One possibility is suck, right, and suck; one is right, suck, left, suck; one is suck, right, right, suck; and the other is suck, right, suck, right, suck. So some of these actions might take you out this this little belief state here, but just use what you knew from the previous definition of the state space and the results of each of those actions and the fact that the right and left actions are nondeterministic and tell me which of these you think will always achieve the goal or will maybe achieve the goal.

And then I want you to also answer for the fill-in-the-blank plan -- that is, is there some plan, some ideal plan, which always or maybe achieves the goal?

And the answer is that any plan that would work in the deterministic world might work in the stochastic world if everything works out okay and all of these plans meet that criteria. But no finite plan is guaranteed to always work because a successful plan has to include at least one move action. And if we try a move action a finite number of times, each of those times the wheels might slip and it won't move, and so we can never be guaranteed to achieve the goal with a finite sequence of actions. Now, what about an infinite sequence of actions? Well, we can't represent that in the language we have so far where a plan is a linear sequence. But we can introduce a new notion of plans in which we do have infinite sequences.

Infinite Sequences

In this new notation, instead of writing plans as a linear sequence of, say, suck, move right, and suck; I'm going to write them as a tree structure. So we start off in this belief state here, which we'll diagram like this. And then we do a suck action. We end up in a new state. And then we do a right action, and now we have to observe the world, and if we observe that we're still in state A, we loop back to this part of the plan. And if we observe that we're in B, we go on and then execute the suck action. And now we're at the end of the plan.

So, we see that there's a choice point here, which we indicate with this sort of tie to say we're following a straight line, but now we can branch. There's a conditional, and we can either loop, or we can continue on, so we see that this finite representation represents an infinite sequence of plans. And we could write it in a more sort of linear notation as S, while we observe A do R, and then do S.

Now, what can we say about this plan? Does this plan achieve the goal? Well, what we can say is that if the stochasticity is independent, that is, if sometimes it works and sometimes it doesn't, then with probability 1 in the limit, this plan will, in fact, achieve the goal, but we can't state any bounded number of steps under which it's guaranteed to achieve the goal. We can only say that it's guaranteed at infinity.

Finding a Successful Plan

Now, I've told you what a successful plan looks like, but I haven't told you how to find one. The process of finding it can be done through search just as we did in problem solving.

So remember in problem solving we start off in a state, and it's a single state, not a belief state. And then we start searching a tree, and we have a big triangle of possible states that we search through, and then we find one path that gets us all the way to a goal state. And we pick from this big tree a single path.

So, with belief states and with branching plan structures, we do the same sort of process, only the tree is just a little bit more complicated. Here we show one of these trees, and it has different possibilities. So, for example, we start off here, and we have one possibility that the first action will be going right, or another possibility that the first action will be performing a suck. But then it also has branches that are part of the plan itself. So this branch here is actually part of the plan as we saw before. It's not a branch in the search space. It's a branch in the plan, so what we do is we search through this tree. We try right as a first action. We keep expanding nodes until we find a portion of the tree like this path is a portion of this search tree. We find that portion which is a successful plan according to the criteria of reaching the goal.

Finding a Successful Plan Question

So let's say we performed that search. We had a big search tree, and then we threw out all the branches except one, and this branch of the search tree does itself have branches, but this branch of the search tree through the belief state represents a single plan, not multiple possible plans.

Now, what I want to know is, for this single plan, what can we guarantee about it? So, say we wanted to know is this plan guaranteed to find the goal in an unbounded number of steps? And what do we need to guarantee that? So it's an unbounded solution. So we need to guarantee that some leaf node is a goal? So, for example, here's a plan that goes through, and at the bottom there's a leaf node.

Now, if this were in problem solving, then remember it would be a sequence of steps with no branches in it, and we know it's a solution if the one leaf node is a goal. But for these with branches, do we need to guarantee that some leaf is a goal, or do we need to guarantee that every leaf is a goal, or is there no possible guarantee that will mean that for sure we've got a solution, although the solution may be of unbounded length?

Then I also want you to answer what does it take to guarantee that we have a bounded solution? That is, a solution that is guaranteed to reach the goal in a bounded, finite number of steps. Do we need to have a plan that has no branches in it, like this branch? Or a plan that has no loops in it, like this loop that goes back to a previous state? Or is there no guarantee that we have a bounded solution?

And the answer is we have an unbounded solution if every leaf in the plan ends up in a goal. So if we follow through the plan, no matter what path we execute based on the observations -- and remember, we don't get to pick the observations. The observations come in to us, and we follow one path or another based on what we observe. So we can't guide it in one direction or another, and so we need every possible leaf node. This one only has one, but if a plan had multiple leaf nodes, every one of them would have to be a goal.

Now, in terms of a bounded solution, it's OK to have branches but not to have loops. If we had branches and we ended up with one goal here and one goal here in 1, 2, 3, steps, 1, 2, 3, steps, that would be a bounded solution. But if we have a loop, we might be 1, 2, 3, 4, 5 -- we don't know how many steps it's going to take.

Problem Solving via Mathematical Notation

Now some people like manipulating trees and some people like a more -- sort of formal -- mathematical notation. So if you're one of those, I'm going to give you another way to think about whether or not we have a solution; and let's start with a problem-solving where a plan consists of a straight line sequence.

We said one way to decide if this is a plan that satisfies the goal is to say, "Is the end state a goal state?" If we want to be more formal and write that out mathematically, what we can say is -- what this plan represents is -- we started in the start state, and then we transitioned to the state that as the result of applying the action of going from A to S, to that start state; and then we applied to that, the result of starting in that intermediate state and applying the action of going from S to F. And if that resulting state is an element of the set of goals, then this plan is valid; this plan gives us a solution.

And so that's a mathematical formulation of what it means for this plan to be a goal. Now, in stochastic partially observable worlds, the equations are a little bit more complicated. Instead of just having S' is a result of applying some action to the initial state, we're dealing with belief states, rather than individual states. And what we say is our new belief state is the result of updating what we get from predicting what our action will do; and then updating it, based on our observation, O, of the world.

So the prediction step is when we start off in a belief state, we look at the action, we look at each possible result of the action -- because they're stochastic -- to each possible member of the belief state, and so that gives us a larger belief state; and then we update that belief state by taking account of the observation -- and that will give us a smaller -- or same size -- belief state. And now, that gives us the new state. Now, we can use this predict and update cycle to keep track of where we are in a belief state.

Tracking the Predict Update Cycle

Here's an example of tracking the Predict Update Cycle. And this is in a world in which the actions are guaranteed to work as advertised -- that is, if you suck to clean up the current location, and if you move right or left, the wheels actually turn, and you do move. But we can call this the kindergarten world because there are little toddlers walking around who can deposit dirt in any location, at any time.

So if we start off in this state, and execute the suck action, we can predict that we'll end up in one of these two states. Then, if we have an observation -- well, we know what the observation's going to be because we know the suck action always works, and we know were were in A; so the only observation we can get is that we're in A -- and that it's clean -- so we end up in that same belief state. And then if we execute the right action -- well then lots of things could happen; because we move right and somebody might have dropped dirt in the right location, and somebody might have dropped dirt in the left location -- or maybe not.

So we end up with four possibilities, and then we can update again when we get the next observation -- say, if we observed that we're in B and it's dirty, then we end up in this belief state. And we can keep on going -- specifying new belief states -- as a result of success of predicts and updates.

Now, this Predict Update Cycle gives us a kind of calculus of belief states that can tell us, really, everything we need to know. But there is one weakness with this approach -- that, as you can see here, some of the belief states start to get large; and this is a tiny little world. Already, we have a belief state with four world states in it. We could have one with 8, 16, 1024 -- or whatever. And it seems that there may be more succinct representations of a belief state, rather than to just list all the world states.

For example, take this one here. If we had divided the world up -- not into individual world states, but into variables describing that state, then this whole belief state could be represented just by: vacuum is on the right. So the whole world could be represented by three states -- or three variables: one, where is the vacuum -- is it on the right, or not? Secondly, is there dirt in the left location? And third, is there dirt in the right location? And we could have some formula, over those variables, to describe states. And with that type of formulation, some very large states -- in terms of enumerating the world states -- can be made small, in terms of the description.

Classical Planning 1

Now I want to describe a notation which we call classical planning, which is a representation language for dealing with states and actions and plans, and it's also an approach for dealing with the problem of complexity by factoring the world into variables.

So under classical planning, a state space consists of all the possible assignments to k boolean variables. So that means there'll be 2k states in that state space. And if we think about the two location vacuum world, we would have three boolean variables. We could have dirt in location A, dirt in location B, and vacuum in location A. The vacuum has to be in either A or B. So these three variables will do, and there will be eight possible states in that world, but they can be succinctly represented through the three variables. And then a world state consists of a complete assignment of true or false through each of the three variables.

And then a belief state... well, just as in problem solving, the belief state depends on what type of environment you want to deal with. Now in the core classical planning, the belief state had to be a complete assignment, and that was useful for dealing with deterministic fully observable domains. But we can easily extend classical planning, and we can deal with belief states that are partial assignments -- that is, some of the variables have values and others don't. So we could have the belief state consisting of vacuum in A is true and the others are unknown, and that small formula represents four possible world states. And we can even have a belief state which is an arbitrary formula in Boolean logic, and that can represent anything we want.

So that's what states look like. Now we have to figure out what actions look like and what the results of those actions look like. And these are represented in classical planning by something called an action schema. It's called a schema because it represents many possible actions that are similar to each other. So let's take an example of we want to send cargo around the world, and we've got a bunch of planes in airports, and we have cargo and so on. I'll show you the action for having a plane fly from one location to another.

Here's one possible representation. We say it's an action schema, so we write the word Action and then we write the action operator and its arguments, so it's a Fly of P from X to Y. And then we list the preconditions, what needs to be true in order to be able to execute this action. We can say something like P better be a plane. It's no good trying to fly a truck or a submarine. And we'll use the And formula from Boolean propositional logic. And X better be an airport. We don't want to try to take off from my backyard. And similarly, Y better be an airport. And, most importantly, P better be at airport X in order to take off from there.

And then we represent the effects of the action by saying, well, what's going to happen? Well once we fly from X to Y, the plane is no longer at X, so we say not At(p, x) -- the plane is no longer at X -- and the plane is now at Y.

So this is called an action schema. It represents a set of actions for all possible planes, for all X and for all Y, represents all of those actions in one schema that says what we need to know in order to apply the action and it says what will happen. In terms of the transition from state spaces, this variable will become false and this one will become true.

Now when we look at this formula, this looks like a term in first order logic, but we're actually dealing with a completely propositional world. It just looks like that because this is a schema. We can apply this schema to specific ground states, specific world states, and then P and X would have specific values, and you can just think of it as concatenating their names all together, and that's just the name of one variable. The name just happens to have this complex form with parentheses and commas in it to make it easier to write one schema that covers all the individual fly actions.

Classical Planning 2

Here we see a more complete representation of a problem solving domain in the language of classical planning. Here's the fly action schema. I've made it a little bit more explicit with from and to airports rather than X or Y.

We want to deal with transporting cargo. So in addition to flying, we have an operator to load cargo, C, onto a plane, P, at airport A -- and you can see the preconditions and effects there -- and an action to unload the cargo from the plane with preconditions and effects.

We have a representation of the initial state. There's two pieces of cargo, there's two planes and two airports. This representation is rich enough and the algorithms on it are good enough that we could have hundreds or thousands of cargo planes and so on representing millions of ground actions. If we had 10 airports and 100 planes, that would be 100, 1,000, 10,000 different Fly actions. And if we had thousands of pieces of cargo, there would be even more load and unload actions, but they can all be represented by the succinct schema.

So the initial state tells us what's what, where everything is, and then we can represent the goal state: that we want to have this piece of cargo to be delivered to this airport, and another piece of cargo has to be delivered to this airport. So now we know what actions and problems of initial and goal states looks like in this representation, but how do we do planning using this?

Progression Search

The simplest way to do planning is really the exact same way that we did it in problem solving. That we start off in an initial state. So P1 was at SFO, say, and cargo, C1, was also at SFO, and all the other things that were in that initial state.

And then we start branching on the possible actions, so say one possible action would be to load the cargo, C1, onto the plane, P1, at SFO, and then that would bring us to another state which would have a different set of state variables set, and we'd continue branching out like that until we hit a state which satisfied the goal predicate.

So we call that forward or progression state space search in that we're searching through the space of exact states. Each of these is an individual world state, and if the actions are deterministic, then it's the same thing as we had before.

But, because we have this representation, there are other possibilities that weren't available to us before.

Regression Search

Another way to search is called backwards or regression search in which we start at the goal. So we take the description of the goal state. C1 is at JFK and C2 is at SFO, so that's the goal state. And notice that that's the complete goal state. It's not that I left out all the other facts about the state; it's that that's all that's known about the state is that these two propositions are true and all the others can be anything you want.

And now we can start searching backwards. We can say what actions would lead to that state? Now, remember, in problem solving we did have that option of searching backwards, if there was a single goal state, we could say what other arcs are coming into that goal state. But here, this goal state doesn't represent a single state; it represents a whole family of states with different values for all the other variables.

And so we can't just look at that, but what we can do is look at the definition of possible actions that will result in this goal. So let's look at it one at a time. Let's first look at what actions could result At(C1, JFK).

Well we look at our action schema, and there's only one action schema that adds an At, and that would be the Unload schema. So Unload of C, P, A adds C, A. And so what we would know is if we want to achieve this, then we would have to do an Unload where the C variable would have to be C1, the P variable is still unknown -- it could be any plane -- and the A variable has to be JFK.

So notice what we've done here. We have this representation in terms of logical formula that allows us to specify a goal as a set of many world states, and we also can use that same representation to represent an arrow here not as a single action but as a set of possible actions.

So this is representing all possible actions for any plane, P, of unloading cargo at the destination. And then we can regress this state over this operator and now we have another representation of this state here. But just as this state was uncertain -- not all the variables were known -- this state too will be uncertain.

For example, we won't know anything about what plane, P, is involved, and now we continue searching backwards until we get to a state where enough of the variables are filled in and where we match against the initial state. And then we have our solution. We found it going backwards, but we can apply the solution going forwards.

Regression vs Progression

Let's show an example of where a backwards search makes sense. I'm going to describe a world in which there is one action, the action of buying a book. And the precondition is we have to know which book it is, and let's identify them by ISBN number.

So we can buy ISBN number B, and the effect is that we own B. And probably there should be something about money, but we're going to leave that out for now to make it simple. And then the goal would be to own ISBN number 0136042597.

Now, if we try to solve this problem with forward search, we'd start in the initial state. Let's say the initial state is we don't own anything. And then we'd think about what actions can we apply? Well if there are ten million different books, ten million ISBN numbers, then there's a branching factor of ten million coming out of this node, and we'd have to try them all in order until we happened to hit upon one that was the right one. It seems very inefficient.

If we go in the backward direction, then we start at the goal. The goal is to own this number. Then we look at our available actions, and out of the ten million actions there's only one action schema, and that action schema can match the goal in exactly one way, when B equals this number, and therefore we know the action is to buy this number, and we can connect the goal to the initial state in the backwards direction in just one step. So that's the advantage of doing backwards or regression search rather than forward search.

Plan Space Search

Now there's one more type of search for plans that we can do with the classical planning language that we couldn't do before, and this is searching through the space of plans rather than searching through the space of states.

In forward search we were searching through concrete world states. In backward search we were searching through abstract states in which some of the variables were unspecified. But in plan space search we search through the space of plans.

And here's how it works. We start off with an empty plan. We have the start state and the goal state, and that's all we know about the plan. So obviously, this plan is flawed. It doesn't lead us from the start to the goal. And then we say let's do an operation to edit or modify that plan by adding something in new.

And here we're tackling the problem of how to get dressed and put on all the clothes in the right order, so we say out of all the operators we have, we could add one of those operators into the plan. And so here we say what if we added the put on right shoe operator. Then we end up with this plan. That still doesn't solve the problem, so we need to keep refining that plan.

Then we come here and say maybe we could add in the put on the left shoe operator. And here I've shown the plan as a parallel branching structure rather than just as a sequence. And that's a useful thing to do because it captures the fact that these can be done in either order.

And we keep refining like that, adding on new branches or new operators into the plan until we got a plan that was guaranteed to work.

This approach was popular in the 1980s, but it's faded from popularity. Right now the most popular approaches have to do with forward search. We saw some of the advantages of backward search. The advantage of forward search seems to be that we can come up with very good heuristics.

So we can do heuristic search, and we saw how important it was to have good heuristics to do heuristic search. And because the forward search deals with concrete plan states, it seems to be easier to come up with good heuristics.

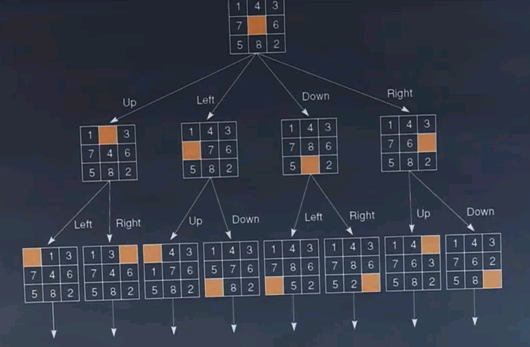

Sliding Puzzle Example

To understand the idea of heuristics, let's talk about another domain. Here we have the sliding puzzle domain. Remember we can slide around these little tiles and we try to reach a goal state. A 15 puzzle is kind of big, so let's how you the state space for the smaller 8 puzzle. Here's just a small portion of it.

Now let's figure out what the action schema looks like for this puzzle. So we only need to describe one action, which is to slide a tile, T, from location A to location B. The precondition: the tile has to be on location A and has to be a tile and B has to be blank and A and B have to be adjacent. That should be an And sign, not an A. So that's the action schema. Oops. I forgot we need an effect, which should be that the tile is now on B and the blank is now on A and the tile is no longer on A and the blank is no longer on B.

Now we talked before about how a human analyst could examine a problem and come up with heuristics and encode those heuristics as a function that would help search do a better job. But with this kind of a formal representation we can automatically come up with good representations of heuristics.

So for example, if we came up with a relaxed problem by automatically going in an throwing out some of the prerequisites -- if you throw out a prerequisite, you make the problem strictly easier -- then you get a new heuristic. So for example, if we crossed out the requirement that B has to be blank, then we end up with the Manhattan or city block heuristic. And if we also throw out the requirement that A and B have to be adjacent, then we get the number of misplaced tiles heuristic. So that means we could slide a tile from any A to any B, no matter how far apart they were. That's the number of misplaced tiles.

Other heuristics are possible. For example, one popular thing is to ignore negative effects, so say let's not say that this takes away the blank being in B. So if we ignore that negative effect, we make the whole problem strictly easier. We'd have a relaxed problem, and that might end up being a good heuristic.

So because we have our actions encoded in this logical form, we can automatically edit that form. A program can do that, and the program can come up with heuristics rather than requiring the human to come up with the heuristics.

Situation Calculus 1

Now I want to talk about one more representation for planning called situation calculus.

To motivate this, suppose we wanted to have the goal of moving all the cargo from airport A to airport B, regardless of how many pieces of cargo there are. You can't express the notion of "all" in propositional languages like classical planning, but you can in first order logic.

There are several ways to use first order logic for planning. The best known is situation calculus. It's not a new kind of logic; rather, it's regular first order logic with a set of conventions for how to represent states and actions. I'll show you what the conventions are.

First, actions are represented as objects in first order logic, normally by functions. And so we would have a function like the function Fly of a plane and a From airport and a To airport which represents an object, which is the action.

Then we have situations, and situations are also objects in the logic, and they correspond not to states but rather to paths -- the paths of actions that we have in state space search. So if you arrive at what would be the same world state by two different sets of actions, those would be considered two different situations in situation calculus.

We describe the situations by objects, so we usually have an initial situation, often called S0, and then we have a function on situations called Result. So the result of a situation object and an action object is equal to another situation.

And now, instead of describing the actions that are applicable in a situation with a predicate Actions of S, situation calculus for some reason decided not to do that and instead we're going to talk about the actions that are possible in the state, and we're going to do that with a predicate.

We have a predicate Possible of A and S, is an action A possible in a state? Now there's a specific form for describing these predicates, and in general it has the form of some precondition of state S implies that it's possible to do action A in state S.

I'll show you the possibility axiom for the Fly action. We would say if there is some P, which is a plane in state S, and there's some X, which is an airport in state S, and there's some Y, which is also an airport in state S, and P is at location X in state S, then that implies that it's possible to fly P from X to Y in state S. And that's known as the possibility axiom for the action Fly.

Situation Calculus 2

There's a convention in situation calculus that predicates like At -- we said plane P was at airport X in situation S -- these types of predicates that can vary from one situation to another are called fluents, from the word fluent, having to do with fluidity or change over time. And the convention is that they refer to a specific situation, and we always put that situation argument as the last in the predicate.

Now, the trickiest part about situation calculus is describing what changes and what doesn't change as a result of an action. Remember in classical planning we had action schemas where we described one action at a time and said what changed. For situation calculus it turns out to be easier to do it the other way around.

Instead of writing one action or one schema or one axiom for each action, we do one for each fluent, for each predicate that can change. And we use the convention called successor state axioms. These are used to describe what happens in the state that's a successor of executing an action.

And in general, a successor state axiom will have the form of saying for all actions and states, if it's possible to execute action A in state S, then -- and I'll show in general what they look like here -- the fluent is true if and only if action A made it true or action A didn't undo it.

So that is, either it wasn't true before and A made it be true, or it was true before and A didn't do something to stop it being true. For example, I'll show you the successor state axiom for the In predicate. And just to make it a little bit simpler, I'll leave out all the For All quantifiers. So wherever you see a variable without a quantifier, assume that there's a For All.

And what we'll say is it's possible to execute A in situation S. If that's true, then the In predicate holds between some cargo C and some plane in the state, which is the result of executing action A in state S. So that In predicate will hold if and only if either A was a load action -- so if we load the cargo into the plane, then the result of executing that action A is that the cargo is in the plane -- or it might be that it was already true that the cargo was in the plane in situation S and A is not equal to an unload action. So for all A and S for which it's possible to execute A in situation S, the In predicate holds if and only if the action was a load or the In predicate used to hold in the previous state and the action is not an unload.

Situation Calculus 3

So I've talked about the possibility axioms and the successor state axioms. That's most of what's in situation calculus, and that's used to describe an entire domain like the airport cargo domain.

And now we describe a particular problem within that domain by describing the initial state. Typically we call that S0, the initial situation. And in S0 we can make various types of assertions of different types of predicates. So we could say that plane P1 is at airport JFK in S0, so just a simple predicate. And we could also make larger sentences, so we could say for all C, if C is cargo, then that C is at JFK in situation S0.

So we have much more flexibility in situation calculus to say almost anything we want. Anything that's a valid sentence in first order logic can be asserted about the initial state.

The goal state is similar. We could have a goal of saying there exists some goal state S such that for all C, if C is cargo, then we want that cargo to be at SFO in state S. So this initial state and this goal says move all the cargo -- I don't care how much there is -- from JFK to SFO.

Now the great thing about situation calculus is that once we've described this in the ordinary language of first order logic, we don't need any special programs to manipulate it and come up with the solution because we already have theorem provers for first order logic and we can just state this as a problem, apply the normal theorem prover that we already had for other uses, and it can come up with an answer of a path that satisfies this goal, a situation which corresponds to a path which satisfies this given the initial state and given the descriptions of the actions.

So the advantage of situation calculus is that we have the full power of first order logic. We can represent anything we want. Much more flexibility than in problem solving or classical planning.

So all together now, we've seen several ways of dealing with planning. We started in deterministic fully observable environments and we moved into stochastic and partially observable environments. We were able to distinguish between plans that can or cannot solve a problem, but we had one weakness in all these different approaches. It is that we weren't able to distinguish between probable and improbable solutions. And that will be the subject of the next unit.