AI class unit 7

These are my notes for unit 7 of the AI class.

Representation with Logic

Introduction

Welcome back. So far we've talked about AI as managing complexity and uncertainty. We've seen how search can discover sequences of actions to solve problems. We've seen how probability theory can represent and reason with uncertainty. And we've seen how machine learning can be used to learn and improve.

AI is a big and dynamic field because we're pushing against complexity in at least three directions. First, in terms of agent design, we start with a simple reflex-based agent and move into goal-based and utility-based agents. Secondly, in terms of the complexity of the environment, we start with simple environments and then start looking at partial observability, at stochastic actions, at multiple agents, and so on. And finally, in terms of representation, the agent's model of the the world becomes increasingly complex.

In this unit we'll concentrate on that third aspect of representation, showing how the tools of logic can be used by an agent to better model the world.

Propositional Logic

The first logic we will consider is called propositional logic. Let's jump right into an example, recasting the alarm problem in propositional logic. We have propositional symbols B, E, A, M and J corresponding to the events of a burglary occurring, of the earthquake occurring, of the alarm going off, of Mary calling, and of John calling. And just as in the probabilistic models, these can be either true or false, but unlike in probability, our degree of belief in propositional logic is not a number. Rather, our belief is that each of these is either true, false, or unknown. Now, we can make logical sentences using these symbols and also using the logical constants true and false by combining them together using logical operators. For example, we can say that the alarm is true whenever the earthquake or burglary is true with this sentence:

So that says whenever the earthquake or the burglary is true, then the alarm will be true. We use this V symbol to mean "or" and a right arrow to mean "implies".

We could also say that it would be true that both John and Mary call when the alarm is true. We write that as:

And we use this symbol to indicate an "and". So this upward-facing wedge looks kind of like an "A" with the crossbar missing, and so you can remember "A" is for "and" where this downward-facing V symbol is the opposite of "and", so that's the symbol for "or".

Now, there's two more connectors we haven't seen yet. There's a double arrow for equivalent, also known as a biconditional, and a not sign for negation. So we could say if we wanted to that John calls if and only if Mary calls. We would write that as:

John is equivalent to Mary -- when on is true, the other is true; when one is false, the other is false. Or we could say that when John calls, Mary doesn't, and vice versa. We could write that as:

And this is the "not" sign.

Now, how do we know what the sentences mean? A propositional logic sentence is either true or false with respect to a model of the world. Now a model is just a set of true/false values for all the propositional symbols, so a model might be the set B is true, E is false, and so on. We can define the truth value of the sentence in terms of the truth of the the symbols with respect to the models using truth tables.

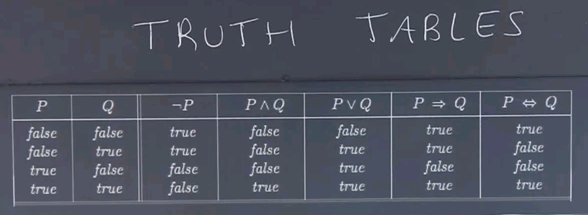

Truth Tables

Here are the truth tables for all the logical connectives.

What a truth table does is list all the possibilities for the propositional symbols, so P and Q can be false and false, false and true, true and false, or true and true. Those are the only four possibilities, and then for each of those possibilities, the truth table lists the truth value of the compound sentence. So the sentence not P is true when P is false and false when P is true. The sentence P and Q is true only when both P and Q are true and false otherwise. The sentence P or Q is true when P or Q is true and false when both are false.

Now, so far, those mostly correspond to the English meaning of those sentences, with one exception, which is that in English, the word "or" is somewhat ambiguous between the inclusive and exclusive or, and this "or" means either or both.

We translate this mark into English P implies Q; or as: if P, then Q; but the meaning in logic is not quite the same as the meaning in ordinary English. The meaning in logic is defined explicitly by this truth table and by nothing else, but let's look at some examples in ordinary English.

If we have the proposition O and have that mean five is an odd number and P meaning Paris is the capital for France, then under the ordinary model of the truth in the real world, what could we say about the sentence ? That is, five is an odd number implies Paris is the capital of France. Would that be true or false? And let's look at one more example? If E is the proposition that five is an even number and M is the proposition that Moscow is the capital of France, what about ? Five is an even number implies Moscow is the capital of France. Is that true or false?

Note: For the question, please be sure to use the rules of logic, not ordinary English!

The answers are first, the sentence if five is an odd number, then Paris is the capital of France, is true in propositional logic. It may sound odd in ordinary English, but in propositional logic, this is the same as true implies true and if we look on this line -- the final line for P and Q, P implies Q is true.a

The second sentence: five is an even number implies Moscow is the capital of France. That's the same as false implies false, and false implies false according to the definition is also true.

Question

Here's a quiz. Use truth tables or whatever other method you want to fill in the values of these tables. For each of the values of P and Q -- false/false, false/true, true/false, or true/true -- look at each of these boxes and click on just the boxes in which the formula for that column will be true. So for which of these four boxes, if any, will the formula be true? And this formula, and this formula.

I solved that with this javascript code:

function print( line ) { WScript.StdOut.WriteLine( line ); }

function implies( p, q ) {

if ( p === true && q === false ) { return false; }

return true;

}

function test( p, q ) {

var a = p && implies( p, q );

var b = ! ( ! p || ! q );

var c = a === b;

var line = p + "\t" + q + "\t" + a + "\t" + b + "\t" + c;

print( line );

}

print( "P\tQ\tA\tB\tC" );

test( false, false );

test( false, true );

test( true, false );

test( true, true );

Here are the answers. For P and P implies Q, we know that P is true in these bottom two cases, and P implies Q, we saw the truth table for P implies Q, is true in the first, second, and fourth case. So the only case that's true for both P and P implies Q is the forth case.

Now, this formula, not the quantity, not P or not Q, we can work that out to be the same as P and Q, we know that P and Q is true only when both are true, so that would be true only in the fourth case and none of the other cases.

And now, we're asking for an equivalent or biconditional between these two cases. Is this one the same as this one? And we see that it is the same because they match up in all four cases. They're false for each of the first three and true in the fourth one, so that means that this is going to be true no matter what. They're always equivalent, either both false or both true, and so we should check all four boxes.

Question

Here's one more example of reasoning in propositional logic. In a particular model of the world, we know the following three sentences are true. E or B implies A. A implies J and M. And B.

We know those three sentences to be true, and that's all we know. Now, I want you to tell me for each of the five propositional symbols, is that symbol true or false or unknown in this model? And tell me for the symbols E, B, A, J and M.

The answer is that B is true. And we know that because it was one of the three sentences that was given to us. And now, according to the first sentence, which says that if E or B is true then A is true. So now we know that A is true. And the second sentence says if A is true then J and M are true. What about E? That wasn't mentioned. Does that mean E is false? No. It means that it's unknown. That a model where E is true and a model where E is false would both satisfy these three sentences. So we mark E as unknown.

Terminology

Now for a little more terminology. We say that a valid sentence is one that is true in every possible model, for every combination of values of the propositional symbols. And a satisfiable sentence is one that is true in some models, but not necessarily in all the models. So what I want you to do is tell me for each of these sentences, whether it is valid, satisfiable but not valid, or unsatisfiable, in other words, false for all models. And the sentences are:

I cheated and used the following code:

function print( line ) { WScript.StdOut.WriteLine( line ); }

function implies( p, q ) {

if ( p === true && q === false ) { return false; }

return true;

}

var p1 = [ [ false ], [ true ] ];

var p2 = [ [ false, false ], [ false, true ], [ true, false ], [ true, true ] ];

var p3 = [

[ false, false, false ],

[ false, false, true ],

[ false, true , false ],

[ false, true , true ],

[ true , false, false ],

[ true , false, true ],

[ true , true , false ],

[ true , true , true ]

];

function a( p ) { p = p[ 0 ]; return p || ! p; }

function b( p ) { p = p[ 0 ]; return p && ! p; }

function c( p ) { var q = p[ 1 ]; p = p[ 0 ]; return p || q || p === q; }

function d( p ) { var q = p[ 1 ]; p = p[ 0 ]; return implies( p, q ) || implies( q, p ); }

function e( p ) {

var food = p[ 0 ];

var party = p[ 1 ];

var drinks = p[ 2 ];

return implies( implies( food, party ) || implies( drinks, party ), implies( food && drinks, party ) );

}

function test( l, f, p ) {

var count = 0;

for ( var i in p ) {

if ( f( p[ i ] ) ) { count++; }

}

if ( count === 0 ) {

print( l + ": unsatisfiable" );

}

else if ( count === p.length ) {

print( l + ": valid (" + count + " out of " + p.length + ")" );

}

else {

print( l + ": satisfiable (" + count + " out of " + p.length + ")" );

}

}

test( "a", a, p1 );

test( "b", b, p1 );

test( "c", c, p2 );

test( "d", d, p2 );

test( "e", e, p3 );

The answers are P and not P is valid. That is, it's true when P is true because of this, and it's true when P is false because of this clause.

P and not P is unsatisfiable. A symbol can't be both true and false at the same time.

P or Q or P is equivalent to Q is valid. So we know that it's true when either P or Q is true, so that's three out of the four cases. In the fourth case, both P and Q are false, and that means P is equivalent to Q. And therefore, in all four cases, it's true.

P implies Q or Q implies P, that's also valid. Now in ordinary English that wouldn't be valid. If the two clauses or the two symbols P and Q were irrelevant to each other we wouldn't say that either one of those was true. But in logic, one or the other must be true, according to the definitions of the truth tables.

And finally, this one's more complicated, if Food then Party or if Drinks then Party implies if Food and Drinks then Party. You can work it all out and both sides of the main implication work out to be equivalent to Not Food or Not Drinks or Party. So that's the same as saying P implies P, saying one side is equivalent to the other side. And if they're equivalent, then the implication relation holds.

Propositional Logic Limitations

Propositional logic. It's a powerful language for what it does. And there are very efficient inference mechanisms for determining validity and satisfiability, although we haven't discussed them. But propositional logic has a few limitations. First, it can only handle true and false values. No capability to handle uncertainty like we did in probability theory. And second, we can only talk about events that are true or false in the world. We can't talk about objects that have properties, such as size, weight, colour, and so on. Nor can we talk about the relations between objects. And third, there are no shortcuts to succinctly talk about a lot of different things happening. Say if we had a vacuum world with a thousand locations, and we wanted to say that every location is free of dirt. We would need a conjunction of a thousand propositions. There's no way to have a single sentence saying that all the locations are clean all at once. So, we will next cover first-order logic which addresses these two limitations.

First Order Logic

I'm going to talk about first order logic and its relation to the other logics we've seen so far -- namely, propositional logic and probability theory. And we're going to talk about them in terms of what they say about the world, which we call the ontological commitment of these logics, and what types of beliefs agents can have using these logics, which we call the epistemological commitments.

So in first order logic we have relations about things in the world, objects, and functions on those objects. And what we can believe about those relations is that they're true or false or unknown. So this is an extension of propositional logic in which all we had was facts about the world and we could believe that those facts were true or false or unknown.

Now in probability theory we had the same types of facts as in propositional logic -- the symbols, or variables -- but the beliefs could be a real number in the range 0 to 1. So logics vary both in what you can say about the world and what you can believe about what's been said about the world.

Another way to look at the representation is to break the world up into representations that are atomic, meaning that a representation of the state is just an individual state with no pieces inside of it. And that's what we used for search and problem solving. We had a state, like state A, and then we transitioned to another state, like state B, and all we could say about those states was are they identical to each other or not and maybe is one of them a goal state or not. But there wasn't any internal structure to those states.

Now, in propositional logic, as well as in probability theory, we break up the world into a set of facts that are true or false, so we call this a factored representation -- that is, the representation of an individual state of the world is factored into several variables -- the B and E and E and M and J, for example -- and those could be Boolean variables or in some types of representations -- not in propositional logic -- they can be other types of variables besides Boolean. Then the third type -- the most complex type of representation -- we call structured. And in a structured representation, an individual state is not just a set of values for variables, but it can include relationships between objects, a branching structure, and complex representations and relations between one object and another.

And that's what we see in traditional programming languages, it's what we see in databases -- they're called structured databases, and we have structured query languages over those databases -- and that's a more powerful representation, and that's what we get in first order logic.

Models

How does first order logic work? What does it do? Well, like propositional logic, we start with a model. In propositional logic a model was a value for each propositional symbol. So we might say that the symbol P was true and the symbol Q was false, and that would be a model that corresponds to what's going on in a possible world.

In first order logic the models are more complex. We start off with a set of objects. Here I've shown four together, these four tiles, but we could have more objects than that. We could say, for example, that the numbers 1, 2, and 3 were also objects in our model. So we have a set of objects. We can also have a set of constants that refer to those objects. So I could use the constant names A, B, C, D, 1, 2, 3. But I don't have to have a one-to-one correspondence between constants and objects. I could have two different constant names that refer to the same object. So I could also have, say, the name CEE that refers to this object, or I could have some of the objects that don't have any names at all.

But I've got a set of constants, and I also have a set of functions. And a function is defined as a mapping from objects to objects. And so, for example, I might have the NumberOf function that maps from a tile to the number on that tile, and that function then would be defined by the mapping from A to 1 and B to 3 and C to 3 and D to 2, and I could have other functions as well.

In addition to functions, I can have relations. So, for example, I could have the Above relation, and I could say in this model of the world the Above relation is a set of tuples. Say A is above B and C is above D. So that was a binary relation holding between two objects. Say one block is above another block. We can have other types of relations, for example, here's a unary relation -- Vowel -- and if we want to say the relation Vowel is true only of the object that we call A, then that's a set of tuples of length one that contains just A. We can even have relations over no objects. So say we wanted to have the relation Rainy, which doesn't refer to any objects at all, but just refers to the current situation. Then since it's not rainy today, we would represent that as the empty set. There's no tuples corresponding to that relation. Or, it if was rainy, we could say that it's represented by a singleton set, and since the arity of Rainy is zero, there would be zero elements in each one of those tuples.

So that's what a model in first order logic looks like.

Syntax

Now let's talk about the syntax of first order logic, and like in propositional logic, we have sentences which describe facts that are true or false. But unlike propositional logic, we also have terms which describe objects. Now, the atomic sentences are predicates corresponding to relations, so we can say Vowel(A) is an atomic sentence, or Above(A,B). And we also have a distinguished relation -- the equality relation. We can say 2 = 2 and the equality relation is always in every model, and sentences can be combined with all the operators from propositional logic, so that's and, or, not, implies, equivalent, and parentheses.

Now, terms, which refer to objects, can be constants, like A, B and 2. They can be variables. We normally use lowercase, like x and y. And they can be functions, like NumberOf(A), which is just another name or another expression that refers to the same object as 1, at least in the model that we showed previously.

And then there's one more type of complex sentence besides the sentences we get by combining operators, that makes first order logic unqiue, and these are the quantifiers. And there are two quantifiers, "for all", which we write with an upside-down A, followed by a variable that it introduces and "there exists", which we write with a backwards E followed by the variable that it introduces. So for example, we could say for all x, if x is a vowel, then the NumberOf(x) is equal to 1, and that's a valid sentence in first order logic. Or we could say there exists an x such that the NumberOf(x) is equal to 2, and this is saying that there's some object in the domain to which the NumberOf object applies and has a value of 2, but we're not saying what that object is.

Now another note is that sometimes as an abbreviation, we'll omit the quantifier, and when we do that, you can just assume that it means "for all"; that's left out just as a shortcut.

And I should say that these forms, or these sentences are typical, and you'll see these form over and over again, so typically, whenever we have a "for all" quantifier introduced, it tends to go with a conditional like Vowel(x) implies NumberOf(x) = 1, and the reason is because we usually don't want to say something about every object in the domain, since the objects can be so different, but rather we want to say something about a particular type of object, say, in this case, vowels.

And also, typically, when we have an exists an x, or an exists any variable, that typically goes with just a form like this, and not with a conditional, because we're talking about just one object that we want to describe.

Vacuum World

Now let's go back to the two-location vacuum world and represent it in first order logic. So first of all we can have locations. We can call the left location A and the right location B and the vacuum V, and the dirt -- say D1 and D2. And then we can have relations. The relation Loc, which is true of any location; Vacuum, which is true of the vacuum; Dirt, which is true of dirt; and At, which is true of an object and a location.

So if we wanted to say the vacuum is at location A, we just say At(V,A). If we want to say there's no dirt in any location, it's a little bit more complicated. We can say for all dirt and for all locations, if d is a dirt, and l is a location, then d is not at l. So that says there's no dirt in any location.

Now note, if there were thousands of locations instead of just two, this sentence would still hold, and that's really the power of first order logic. Let's keep going and try some more examples. If I want to say the vacuum is in a location with dirt without specifying what location it's in, I can do that. I can say there exists an l and there exists a d such that d is a dirt and l is a location and the vacuum is at the location and the dirt is at that same location, and that's the power of first order logic.

Now one final thing. You might ask what "first order" means. It means that the relations are on objects, but not on relations, and that would be called "higher order". In higher order logic we could, say, define the notion of a transitive relation talking about relations itself, and so we could say for all R, transitive of R is equivalent to for all a, b and c R of a, b and R of b, c implies R of a, c. So that would be a valid statement in higher order logic that would define the notion of a transitive relation, but this would be invalid in first order logic.

Question

Now let's get some practice in first order logic. I'm going to give you some sentences, and for each one, I want you to tell me if it is valid -- that is, always is true -- satisfiable, but not valid; that is, there's some models for which it is true; or unsatisfiable, meaning there are no models for which it is true. And the first sentence is, there exists an x and a y such that x equals y. Second sentence: there exists an x such that x = x, implies for all y there exists a z such that y = z. Third sentence: for all x, p of x or not p of x. And fourth: there exists an x, P of x.

The answers are the first sentence is valid. It's always true. Why is that? Because every model has to have at least one object and we can have both x and y refer to that same object and so that object must be equal to itself.

Second, let's see. The left-hand side of this implication has to be true. x is always equal to x, and the right-hand side says for every y, does there exist a z such that y equals z? And we can say yes, there is. We can always choose y itself for the value of z, and then y equals y, so true implies true. That's always true. Valid.

Third sentence: for all x, P of x or not P of x, and that's always true, because everything has to be either in the relation for P or out of the relation for P, so that's valid.

And the fourth: there exists an x, P of x, and that's true for the models in which there is some x that is a member of P, but it doesn't necessarily have to be any at all, P might be an empty relation, so this is satisfiable. True in some models, but not true in all models.

Question

Now I'm going to give you some sentences or axioms in first order logic, and I want you to tell me if they correctly or incorrectly represent the English that I'm asking about. So tell me yes or no, are these good representations?

And the first, I want to represent the English sentence "Sam has 2 jobs", and the first order logic sentence is there exists an x and a y such that Job(Sam,x) and Job(Sam,y) and not x=y. And so tell me yes, that correctly represents Sam has two jobs, or no, there's a problem.

And secondly, I want to represent the idea of set membership. Now, assume I've already defined the notion of adding an element to a set. Can I define set membership with these two axioms? For all x and s, x is a member of the result of adding x to any set x, and for all x and x, x is a member of s implies that for all y, x is a member of the set that you get when you add y to s.

And third, I'm going to try to define the notion of adjacent squares on, say, a checkerboard, where the squares are numbers with x and y coordinates and we want to just talk about adjacency in the horizontal and vertical direction. Can I define that as follows? For all x and y, the square x, y is adjacent to the square +(x,1), y and the square(x,y) is adjacent to the adjacent to the square(x, +(y,1)) and assume that we've defined the notion of + somewhere and the character set allows + to occur as the character for a function. Tell me yes or no, is that a good representation of the notion of adjacency?

The first answer is yes, this is a good representation of the sentence "Sam has two jobs". It says there exists an x and y, and one of them is a job of Sam and the other one is a job of Sam, and crucially, we have to say that x is not equal to y. Otherwise, this would be satisfied and we could have the same job represented by the variables x and y.

Is this a good representation of the member function? No. It does do a good job of telling you what is a member, so if x is a member of a set because it's one member and then we can always add other members and it's still a member of that set, but it doesn't tell you anything about what x is not a member of. So for example, we want to know that 3 is not a member of the empty set, but we can't prove that with what we have here.

And we have a similar problem down here. This is not a good representation of adjacent relation. So it will tell you, for example, that square(1,1) is adjacent to square(2,1) and also to square(1,2), so it's doing something right, but one problem is that it doesn't tell you in the other direction. It doesn't tell you that (2,1) is adjacent to (1,1) and another problem is that it doesn't tell you that (1,1) is not adjacent to (8,9) because again, there's no way to prove the negative. And the moral is that when you're trying to do a definition, like adjacent, or member, what you usually want to do is have a sentence with the equivalent or the biconditional sign to say this is true if and only if rather than to just have an assertion or to have an implication in one direction.