AI class unit 5

These are my notes for unit 5 of the AI class.

Machine Learning

Introduction

{{#ev:youtubehd|8o1fAcyhap4}}

Welcome to the machine learning unit. Machine learning is a fascinating area. The world has become immeasurably data-rich. The world wide web has come up over the last decade. The human genome is being sequenced. Vast chemical databases, pharmaceutical databases, and financial databases are now available on a scale unthinkable even five years ago. To make sense of the data, to extract information from the data, machine learning is the discipline to go to.

Machine learning is an important sub-field of artificial intelligence. It's my personal favourite next to robotics because I believe it has a huge impact on society and is absolutely necessary as we move forward.

So in this class, I teach you some of the very basics of machine learning, and in our next unit Peter will tell you some more about machine learning. We'll talk about supervised learning, which is one side of machine learning, and Peter will tell you about unsupervised learning, which is a different style. Later in this class we will also encounter reinforcement learning, which is yet another style of machine learning. Anyhow, let's just dive in.

What is Machine Learning?

{{#ev:youtubehd|tEzGdI9nQt4}}

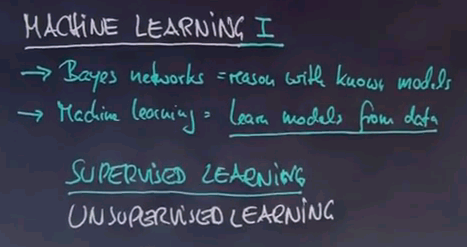

Welcome to the first class on machine learning. So far we talked a lot about Bayes Networks. And the way we talked about them is all about reasoning within Bayes Networks that are known. Machine learning addresses the problem of how to find those networks or other models based on data. Learning models from data is a major, major area of artificial intelligence and it's perhaps the one that had the most commercial success. In many commercial applications the models themselves are fitted based on data. For example, Google uses data to understand how to respond to each search query. Amazon uses data to understand how to place products on their website. And these machine learning techniques are the enabling techniques that make that possible.

So this class which is about supervised learning will go through some very basic methods for learning models from data, in particular, specific types of Bayes Networks. We will complement this with a class on unsupervised learning that will be taught next after this class.

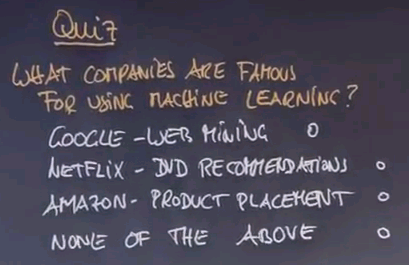

Let me start off with a quiz. The quiz is: What companies are famous for machine learning using data? Google for mining the web. Netflix for mining what people would like to rent on DVD, which is DVD recommendations. Amazon.com for product placement. Check any or all, and if none of those apply, check down here.

{{#ev:youtubehd|SnbvK3_ayWI}}

And, not surprisingly, the answer is all of those companies and many, many more use massive machine learning for making decisions that are really essential to their businesses. Google mines the web and uses machine learning for translation, as we've seen in the introductory level. Netflix has used machine learning extensively for understanding what type of DVD to recommend to you next. Amazon composes its entire product pages using machine learning by understanding how customers respond to different compositions and placements of their products, and many, many other examples exist. I would argue that in Silicon Valley, at least half the companies dealing with customers and online products do extensively use machine learning. So it makes machine learning a really exciting discipline.

Stanley DARPA Grand Challenge

{{#ev:youtubehd|Q1xFdQfq5Fk}}

In my own research, I've extensively used machine learning for robotics. What you see here is a robot my students and I built at Stanford called Stanley, and it won the DARPA Grand Challenge. It's a self-driving car that drives without any human assistance whatsoever, and this vehicle extensively uses machine learning.

The robot is equipped with a laser system. I will talk more about lasers in my robotics class, but here you can see how the robot is able to build 3D models of the terrain ahead. These are almost like video game models that allow it to make assessments where to drive and where not to drive. Essentially it's trying to drive on flat ground.

The problem with these lasers is that they don't see very far. They only see about 25 meters out, so to drive really fast the robot has to see further, and this is where machine learning comes into play. What you see here is camera images delivered by the robot, superimposed with laser data. The laser doesn't see very far, but it is good enough to extract samples of driveable road surface that can then be machine learned and extrapolated into the entire camera image. That enables the robot to use the camera to see driveable terrain all the way to the horizon, up to about 200 meters out, enough to drive really, really fast. This ability to adapt its vision by deriving its own training examples using lasers, but seeing out 200 meters or more, was a key factor in winning the race.

Taxonomy

{{#ev:youtubehd|m-hcAePIkWY}}

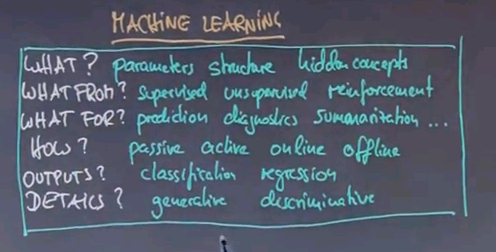

Machine learning is a very large field with many different methods and many different applications. I will now define some of the very basic terminology that is being used to distinguish different machine learning methods.

Let's start with the what. What is being learned? You can learn parameters, like the probabilities of a Bayes Network. You can learn structure, like the arc structure of a Bayes Network. And you might even discover hidden concepts. For example, you might find that certain training examples form a hidden group. Netflix might find that there are different types of customers, some that care about classic movies and some that care about modern movies, and those might form hidden concepts whose discovery can really help you make better sense of the data.

Next is what from? Every machine learning method is driven by some sort of target information that you care about. In supervised learning, which is the subject of today's class, we're given specific target labels, and I'll give you examples in just a second. We also talk about unsupervised learning, where target labels are missing and we use replacement principles to find, for example, hidden concepts. Later there will be a class on reinforcement learning, where an agent learns from feedback from the physical environment by interacting, trying actions, and receiving some sort of evaluation from the environment like "Well done" or "That works." Again, we will talk about those in detail later.

There are different things you could try to do with machine learning techniques. You might care about prediction. For example, you might care about what's going to happen in the future in the stock market. You might care to diagnose something, which is you get data and you wish to explain it, and you use machine learning for that. Sometimes your objective is to summarise something. For example, if you read a long article, your machine learning method might aim to produce a short article that summarises the long article. And there are many, many, many more different things.

You can also talk about how to learn. We use the word passive if your learning agent is just an observer and has no impact on the data itself. Otherwise we call it active. Sometimes learning occurs online, which means while the data is being generated, and sometimes it occurs offline, which means after the data has been generated.
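To make the online versus offline distinction concrete, here is a minimal sketch in Python. It is not from the lecture: the "learning" is just estimating a mean, and the names `offline_mean`, `OnlineMean`, and the sample values are hypothetical, chosen only for illustration.

```python
def offline_mean(samples):
    """Offline: learn only after all the data has been generated."""
    return sum(samples) / len(samples)


class OnlineMean:
    """Online: refine the estimate while the data is still arriving."""

    def __init__(self):
        self.count = 0
        self.mean = 0.0

    def update(self, x):
        # Incremental update: move the estimate towards the new sample.
        self.count += 1
        self.mean += (x - self.mean) / self.count
        return self.mean


samples = [2.0, 4.0, 6.0, 8.0]  # hypothetical data stream

learner = OnlineMean()
estimates = [learner.update(x) for x in samples]  # one estimate per arriving sample

print("online estimates:", estimates)
print("offline estimate:", offline_mean(samples))
```

Once all the samples have been seen, both learners agree. The difference is that the online learner has a usable estimate after every sample.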

There are different types of outputs of a machine learning algorithm. Today we'll talk about classification versus regression. In classification the output is binary or one of a fixed number of classes; for example, something is either a chair or not. In regression the output is continuous; the temperature might be 66.5 degrees in our prediction.
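The difference shows up in the type of the output. The sketch below uses a nearest-neighbour lookup as a stand-in learner, which is an assumption for illustration and not a method introduced in this unit. The data values and the names `chair_data`, `temp_data`, `classify`, and `regress` are hypothetical.

```python
def nearest(train, x):
    """Return the (input, target) pair whose input is closest to x."""
    return min(train, key=lambda pair: abs(pair[0] - x))


# Classification: targets come from a fixed set of classes.
# Hypothetical input: seat height in meters.
chair_data = [(0.4, "chair"), (0.5, "chair"), (1.8, "not chair"), (2.0, "not chair")]

# Regression: targets are continuous numbers.
# Hypothetical input: hour of day, target: temperature in degrees.
temp_data = [(6.0, 58.0), (12.0, 66.5), (18.0, 63.0)]


def classify(x):
    return nearest(chair_data, x)[1]  # a class label


def regress(x):
    return nearest(temp_data, x)[1]  # a real number


print(classify(0.45), regress(11.0))
```

The same lookup serves both tasks; only the kind of target stored with each example changes.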

And there are tons of internal details we will talk about. Just to name one: we will distinguish generative from discriminative. Generative methods seek to model the data as generally as possible, whereas discriminative methods seek to distinguish data. This might sound like a superficial distinction, but it has enormous ramifications for the learning algorithm.

Now to tell you the truth, it took me many years to fully learn all these words here, and I don't expect you to pick them all up in one class, but you should at least know that they exist. And as they come up I'll emphasise them, so you can sort any learning method I tell you about back into the specific taxonomy over here.

Supervised Learning

{{#ev:youtubehd|nxX9Ihi4HZQ}}

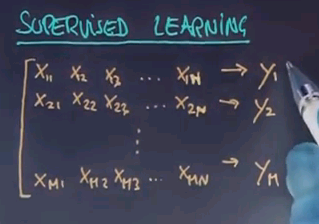

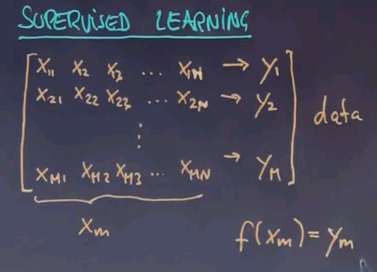

The vast amount of work in the field falls into the area of supervised learning. In supervised learning you're given for each training example a feature vector and a target label named Y. For example, for a credit rating agency, X1, X2, X3 might be features such as: Is the person employed? What is the salary of the person? Has the person previously defaulted on a credit card? And so on. And Y indicates whether the person is going to default on the credit or not. Now machine learning is typically carried out on past data, where the credit rating agency might have collected features just like these and actual occurrences of default or not. What it wishes to produce is a function that allows us to make predictions for future customers. So when a new person comes in with a different feature vector, can we capture as well as possible the functional relationship between these features X1 to Xn and Y? You can apply the exact same idea in image recognition, where X might be pixels of images or it might be features of things found in images, and Y might be a label that says whether a certain object is contained in an image or not.

Now in supervised learning you're given many such examples: X11 to X1n leads to Y1, X21 to X2n leads to Y2, and so on all the way to index m.

This is called your data. If we call each input vector Xm, we wish to find the function that, given any Xm or any future vector X, produces an output as close as possible to the target signal Y. Now this isn't always possible, and sometimes it's acceptable, in fact preferable, to tolerate a certain amount of error on your training data. But the subject of machine learning is to identify this function over here.

And once you identify it you can use it for future Xs that weren't part of the training set to produce a prediction that hopefully is really, really good.
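The setup above can be sketched in a few lines of Python. The credit data is entirely made up. The learner, a one-feature threshold rule (a "decision stump"), is an assumption chosen because it is short; it is not a method from this unit. The names `data`, `make_stump`, `learn_stump`, and `training_error` are hypothetical.

```python
# Hypothetical training data: each example is (x1, x2, x3) -> y
#   x1 = employed (1 or 0), x2 = salary in thousands, x3 = previously defaulted (1 or 0)
#   y  = 1 if the person defaulted on the credit, else 0
data = [
    ((1, 60.0, 0), 0),
    ((1, 45.0, 0), 0),
    ((0, 10.0, 1), 1),
    ((1, 30.0, 1), 1),
    ((0, 15.0, 0), 1),
    ((1, 80.0, 1), 0),
]


def training_error(f, examples):
    """Fraction of training examples on which f disagrees with the target."""
    return sum(f(x) != y for x, y in examples) / len(examples)


def make_stump(j, t, below):
    """Predict `below` when feature j is <= t, and the other label otherwise."""
    return lambda x: below if x[j] <= t else 1 - below


def learn_stump(examples):
    """Search all one-feature threshold rules and keep the one with lowest training error."""
    best_err, best_f = None, None
    for j in range(len(examples[0][0])):
        for t in sorted({x[j] for x, _ in examples}):
            for below in (0, 1):
                f = make_stump(j, t, below)
                err = training_error(f, examples)
                if best_err is None or err < best_err:
                    best_err, best_f = err, f
    return best_f


f = learn_stump(data)

# Use the learned function on future feature vectors that were not in the training set.
print(f((1, 50.0, 0)), f((0, 20.0, 1)))
```

On this toy data the search settles on a salary threshold. With real data a perfect fit is rarely available, which is where tolerating some training error comes in.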

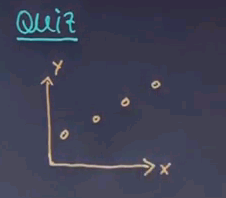

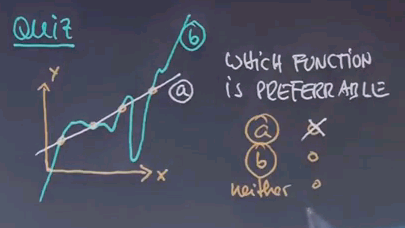

So let me ask you a question. And this is a question for which I haven't given you the answer but I'd like to appeal to your intuition. Here's one data set where the X is one dimensionally plotted horizontally and the Y is vertically and suppose that looks like this.

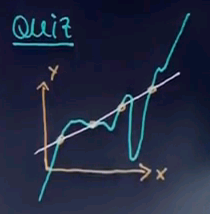

Suppose my machine learning algorithm gives me two hypotheses. One is this function over here which is a linear function and one is this function over here.

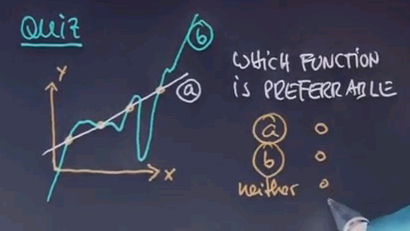

I'd like to know which of the functions you find preferable as an explanation for the data. Is it function A? Or function B?

Occam's Razor

{{#ev:youtubehd|FHJx9RVVKFg}}

And I hope you guessed function A. Even though both perfectly describe the data, B is much more complex than A. In fact, outside the data B seems to go to minus infinity much faster than these data points, and to plus infinity much faster than these data points over here. And in between we have wild oscillations that don't correspond to any data. So I would argue A is preferable.

The reason why I asked this question is because of something called Occam's Razor. Occam can be spelled many different ways. And what Occam says is that, everything else being equal, choose the less complex hypothesis.
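A small Python sketch can reproduce the situation in the quiz. The data points and both functions are invented for illustration and are not the ones on the slide: `hypothesis_a` is a straight line, and `hypothesis_b` adds a degree-5 term that vanishes at every data point, so both fit the data perfectly.

```python
# Hypothetical data lying exactly on a straight line.
data = [(0, 0), (1, 1), (2, 2), (3, 3), (4, 4)]


def hypothesis_a(x):
    """Simple hypothesis: a linear function."""
    return x


def hypothesis_b(x):
    """Complex hypothesis: the line plus a degree-5 term that is zero at every data point."""
    wiggle = 1
    for xi, _ in data:
        wiggle *= (x - xi)
    return x + wiggle


# Both hypotheses describe the training data perfectly...
for x, y in data:
    assert hypothesis_a(x) == y and hypothesis_b(x) == y

# ...but they disagree sharply between and outside the data points.
for x in (-1, 0.5, 5):
    print(x, hypothesis_a(x), hypothesis_b(x))
```

The data alone cannot separate the two hypotheses, so the preference for the simpler one has to come from a principle like Occam's Razor.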

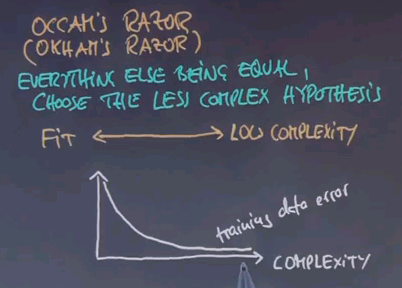

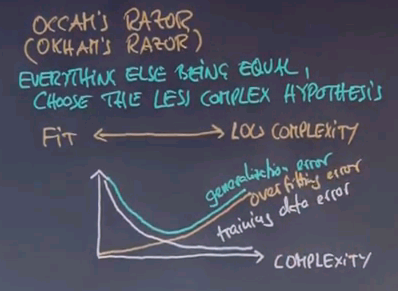

Now in practice there's actually a trade-off between a really good data fit and low complexity. Let me illustrate this to you by a hypothetical example. Consider the following graph where the horizontal axis graphs complexity of the solution. For example, if you use polynomials this might be a high-degree polynomial over here and maybe a linear function over here which is a low-degree polynomial. Your training data error tends to go like this.

The more complex the hypothesis you allow the more you can just fit your data. However, in reality your generalisation error on unknown data tends to go like this. It is the sum of the training data error and another function which is called the overfitting error.

Not surprisingly, the best complexity is obtained where the generalisation error is at its minimum. There are methods to calculate the overfitting error. They go into a statistical field under the name bias-variance methods. However, in practice you're often just given the training data error. You find that if you don't pick the model that minimises the training data error, but instead push back on the complexity, your algorithm tends to perform better, and that is something we will study a little bit in this class.

However, this slide is really important for anybody doing machine learning in practice. If you deal with data and you have ways to fit your data, be aware that overfitting is a major source of poor performance of a machine learning algorithm. And I'll give you examples in just one second.
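The two curves can be traced on a toy problem. The sketch below fits polynomials of increasing degree to four made-up noisy points and measures the error on the training points and on three held-out points. Using held-out data as a stand-in for the generalisation error is an assumption of this sketch, as are all data values and the names `polyfit` and `mse`.

```python
def polyfit(xs, ys, degree):
    """Least-squares polynomial coefficients (lowest power first) via the normal equations."""
    n = degree + 1
    # Augmented matrix [A^T A | A^T y] for the Vandermonde matrix A.
    m = [[sum(x ** (i + j) for x in xs) for j in range(n)]
         + [sum(y * x ** i for x, y in zip(xs, ys))] for i in range(n)]
    # Gaussian elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution.
    coeffs = [0.0] * n
    for i in reversed(range(n)):
        s = sum(m[i][j] * coeffs[j] for j in range(i + 1, n))
        coeffs[i] = (m[i][n] - s) / m[i][i]
    return coeffs


def mse(coeffs, xs, ys):
    """Mean squared error of the polynomial on the given points."""
    preds = [sum(c * x ** k for k, c in enumerate(coeffs)) for x in xs]
    return sum((p - y) ** 2 for p, y in zip(preds, ys)) / len(xs)


# Hypothetical data: the underlying relationship is y = x, the training targets are noisy.
train_x, train_y = [0.0, 1.0, 2.0, 3.0], [0.5, 0.5, 2.5, 2.5]
held_x, held_y = [0.5, 1.5, 2.5], [0.5, 1.5, 2.5]

train_err, held_err = [], []
for degree in range(4):  # complexity grows with the polynomial degree
    coeffs = polyfit(train_x, train_y, degree)
    train_err.append(mse(coeffs, train_x, train_y))
    held_err.append(mse(coeffs, held_x, held_y))
    print(degree, train_err[-1], held_err[-1])
```

The training error keeps falling as the degree grows and reaches zero at degree 3, where the polynomial passes through every training point. The held-out error is lowest at a low degree and rises again for the most complex fit.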